Finding the Words to Say: Hidden State Visualizations for Language Models

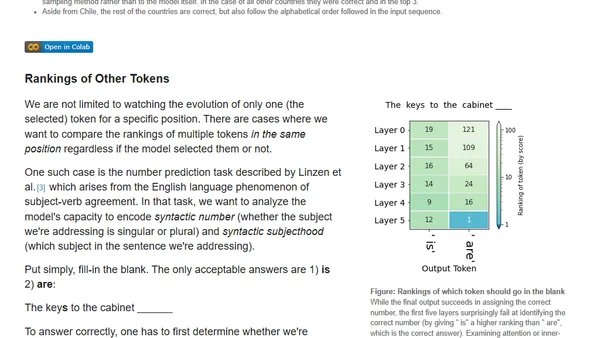

Explores visualizing hidden states in Transformer language models to understand their internal decision-making process during text generation.

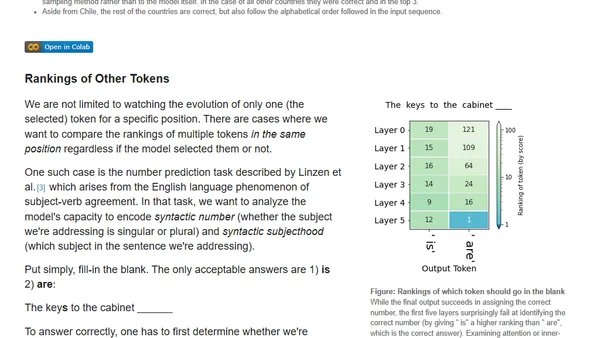

Explores methods for controlling attributes like topic and style in neural text generation using decoding strategies, prompt design, and fine-tuning.

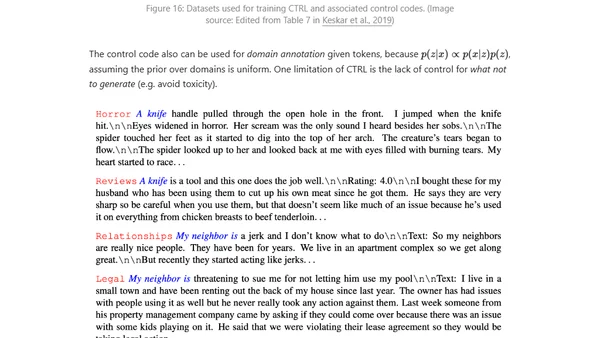

Explores interactive methods for interpreting transformer language models, focusing on input saliency and neuron activation analysis.

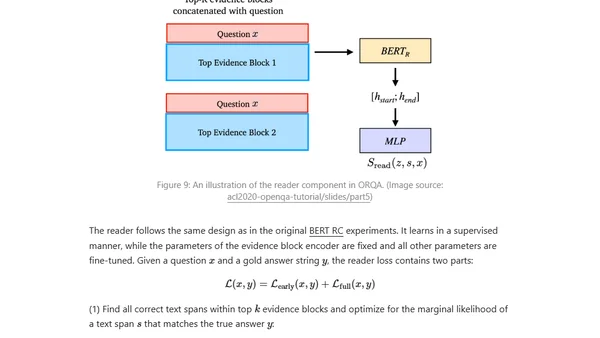

A technical overview of approaches for building open-domain question answering systems using pretrained language models and neural networks.

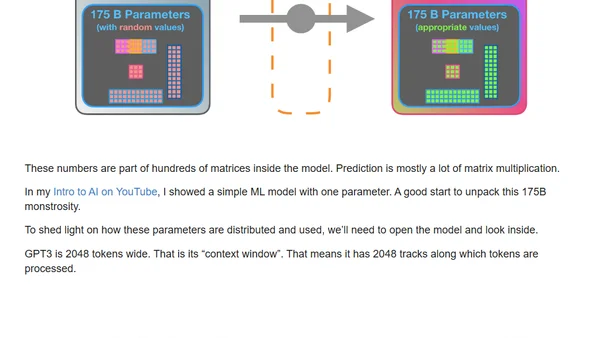

A visual guide explaining how GPT-3 is trained and generates text, breaking down its transformer architecture and massive scale.

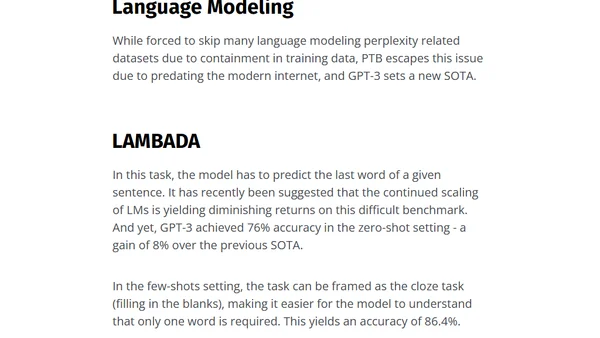

An analysis of OpenAI's GPT-3 language model, focusing on its 175B parameters, in-context learning capabilities, and performance on NLP tasks.

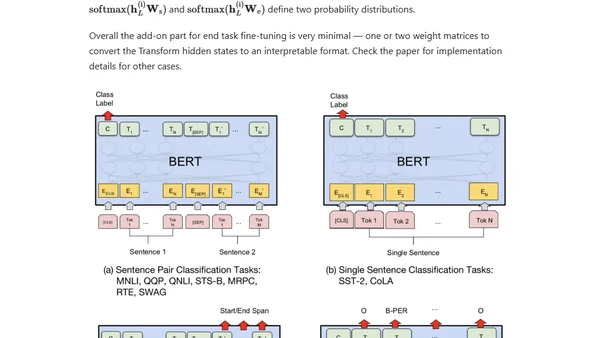

A technical overview of the evolution of large-scale pre-trained language models like BERT, GPT, and T5, focusing on contextual embeddings and transfer learning in NLP.

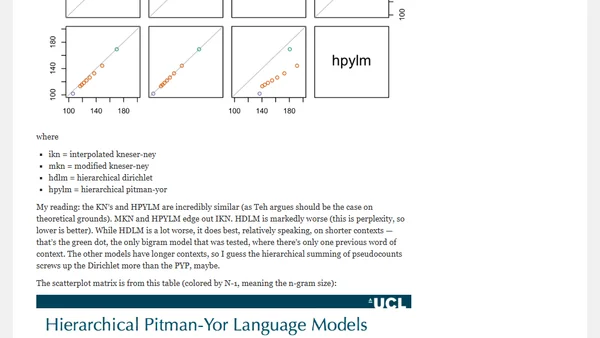

A scatterplot analysis comparing perplexity results of Kneser-Ney, hierarchical Pitman-Yor, and hierarchical Dirichlet language models from a research paper.