Understanding Parameter-Efficient Finetuning of Large Language Models: From Prefix Tuning to LLaMA-Adapters

A guide to parameter-efficient finetuning methods for large language models, covering techniques like prefix tuning and LLaMA-Adapters.

SebastianRaschka.com is the personal blog of Sebastian Raschka, PhD, an LLM research engineer whose work bridges academia and industry in AI and machine learning. On his blog and in its notes section, he publishes in-depth, well-documented articles on topics such as large language models (LLMs), reasoning models, machine learning in Python, neural networks, data science workflows, and deep learning architectures. Recent posts explore advanced themes like “reasoning LLMs”, comparisons of modern open-weight transformer architectures, and guides for building, training, or analyzing neural networks and model internals.

103 articles from this blog

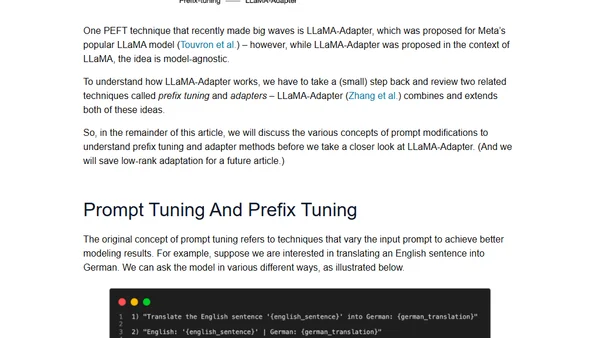

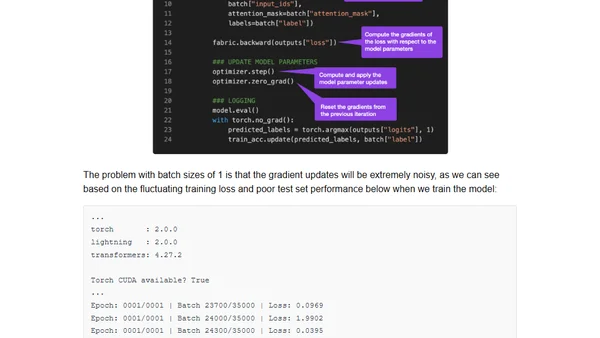

A guide to finetuning large language models on a single GPU using gradient accumulation to overcome memory limitations.

A guide on managing the flood of AI and machine learning research, covering tools and strategies for prioritizing papers and news.

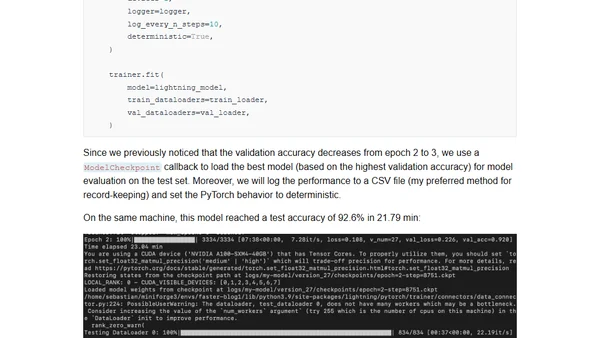

Learn techniques to speed up PyTorch model training by 8x with PyTorch Lightning while maintaining accuracy.

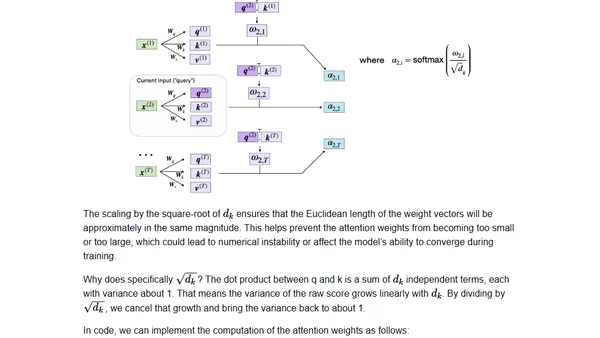

A technical guide to coding the self-attention mechanism from scratch, as used in transformers and large language models.

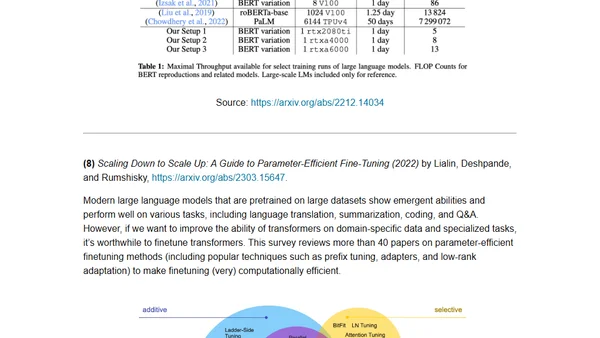

A curated reading list of key academic papers for understanding the development and architecture of large language models and transformers.

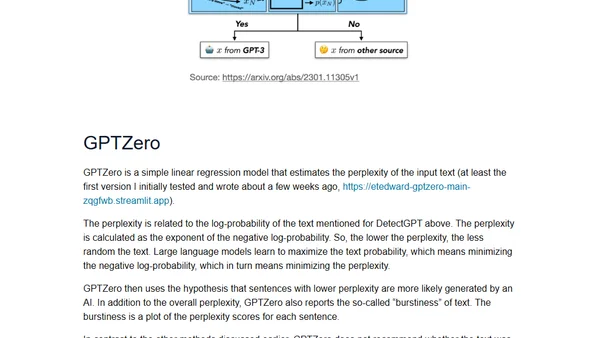

An overview of four different methods for detecting AI-generated text, including OpenAI's AI Classifier, DetectGPT, GPTZero, and watermarking.

A comparison of AutoAugment, RandAugment, AugMix, and TrivialAugment image augmentation methods in PyTorch for reducing overfitting.

Analyzes the limitations of AI chatbots like ChatGPT in providing accurate technical answers and discusses the need for curated data and human experts.

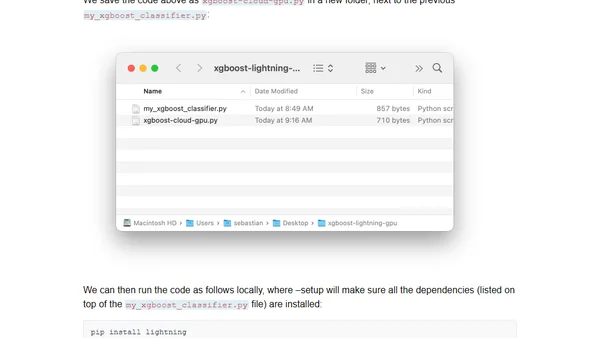

Learn how to train an XGBoost classifier using cloud GPUs without managing infrastructure via the Lightning AI framework.

A curated list of the top 10 open-source machine learning and AI projects released or updated in 2022, including PyTorch 2.0 and scikit-learn 1.2.

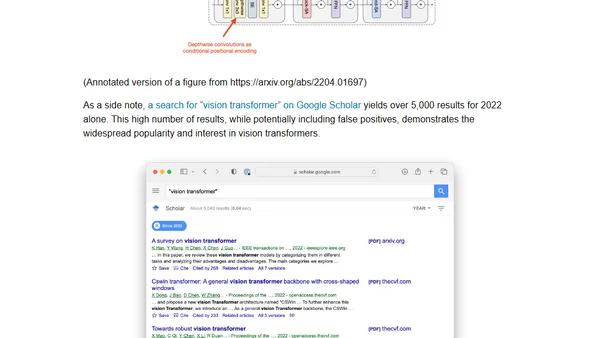

A review of the top 10 most influential machine learning papers from 2022, including ConvNeXt and MaxViT, with technical analysis.

The author announces the launch of 'Ahead of AI', a monthly newsletter covering AI trends, educational content, and personal updates on machine learning projects.

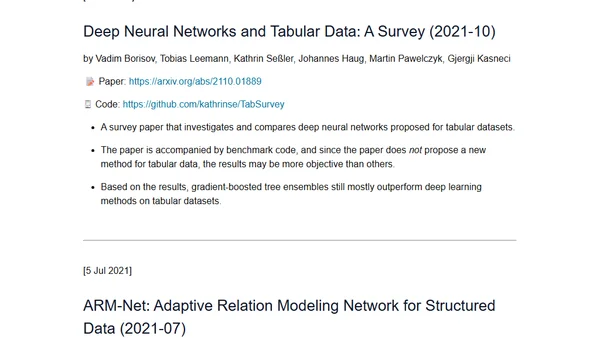

A curated list and summary of recent research papers exploring deep learning methods specifically designed for tabular data.

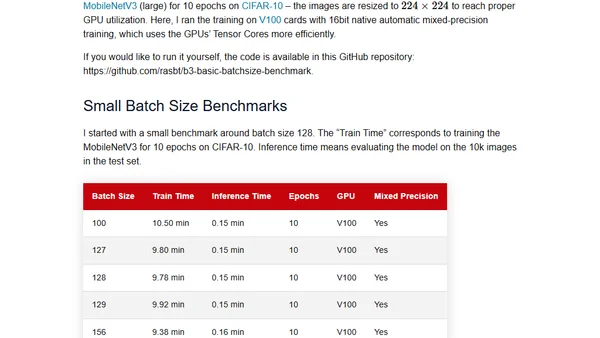

Challenges the common practice of using powers of 2 for neural network batch sizes, examining the theory and practical benchmarks.

Learn how to deploy a deep learning research demo on the cloud using the Lightning framework, including GPU training and model sharing.

Learn to build a Super Resolution GAN demo using the Lightning framework in this first part of a deep learning tutorial series.

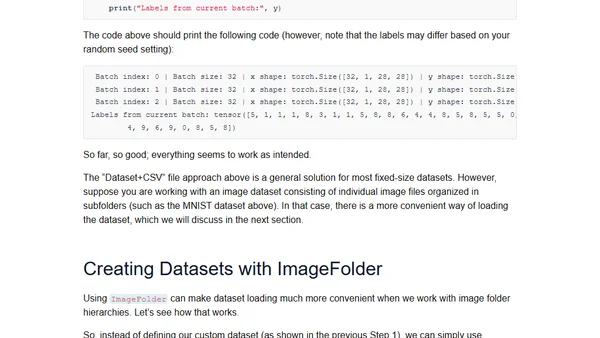

A hands-on exploration of PyTorch's new DataPipes for efficient data loading, comparing them to traditional Datasets and DataLoaders.

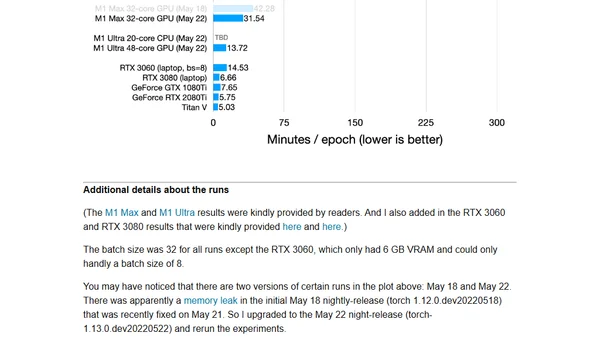

A hands-on review of PyTorch's new M1 GPU support, including installation steps and performance benchmarks for deep learning tasks.

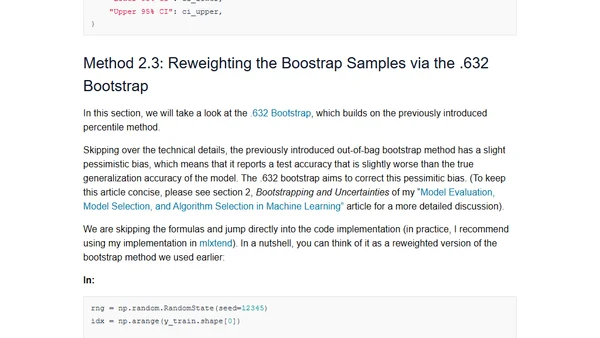

A guide to creating confidence intervals for evaluating machine learning models, covering multiple methods to quantify performance uncertainty.