No, We Don't Have to Choose Batch Sizes As Powers Of 2

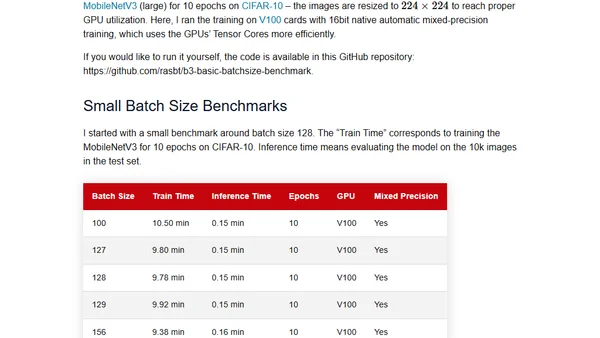

This article critically examines the long-held convention of choosing batch sizes that are powers of 2 for neural network training. It explores the theoretical justifications, such as memory alignment and GPU efficiency with Tensor Cores, and questions whether these benefits hold up in real-world training.
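The alignment argument can be made concrete with a small sketch. It assumes, based on NVIDIA's general guidance for FP16 Tensor Core operations, that the relevant requirement is a dimension being a multiple of 8. The helper names below are hypothetical. Under that assumption, every power of 2 that is at least 8 is aligned, but so are sizes such as 96 or 192. A power-of-2 rule is therefore stricter than the alignment argument alone requires.

```python
# Sketch only: the "multiple of 8" threshold is an assumption drawn from
# NVIDIA's general FP16 Tensor Core guidance, and all helper names are hypothetical.
TENSOR_CORE_MULTIPLE = 8


def is_power_of_two(n: int) -> bool:
    """True if n is a positive power of 2 (1, 2, 4, 8, ...)."""
    return n > 0 and (n & (n - 1)) == 0


def is_tensor_core_aligned(n: int, multiple: int = TENSOR_CORE_MULTIPLE) -> bool:
    """True if n is a positive multiple of `multiple`."""
    return n > 0 and n % multiple == 0


def pad_to_multiple(n: int, multiple: int = TENSOR_CORE_MULTIPLE) -> int:
    """Smallest multiple of `multiple` that is >= n (ceiling division)."""
    return -(-n // multiple) * multiple


if __name__ == "__main__":
    # Compare the power-of-2 rule with the weaker multiple-of-8 condition.
    for bs in (64, 96, 100, 128, 192, 256):
        print(
            f"batch={bs:4d}  power_of_2={is_power_of_two(bs)!s:5}  "
            f"aligned_to_8={is_tensor_core_aligned(bs)!s:5}  "
            f"padded={pad_to_multiple(bs)}"
        )
```

In this sketch, a batch size of 100 would need padding to 104 to meet the assumed alignment, while 96 already qualifies without being a power of 2.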
