MicroGrad.jl: Part 5 MLP

Part 5 of a series on building an automatic differentiation package in Julia, demonstrating its use to create and train a multi-layer perceptron on the moons dataset.

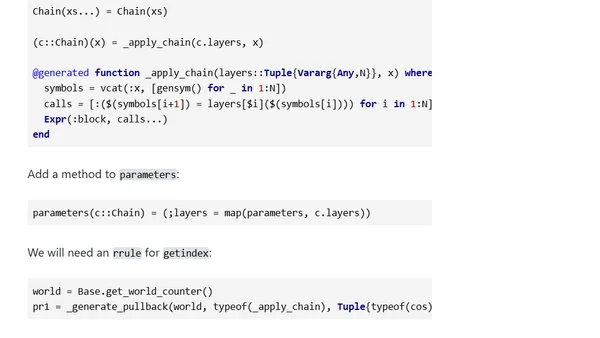

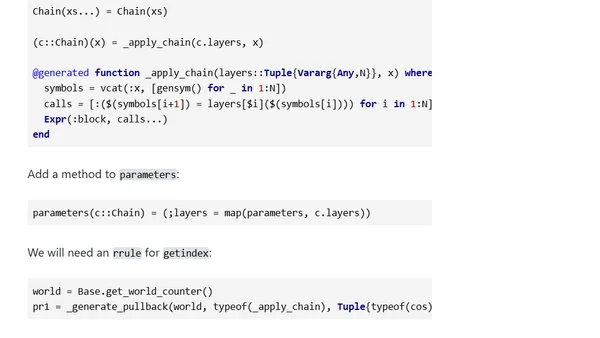

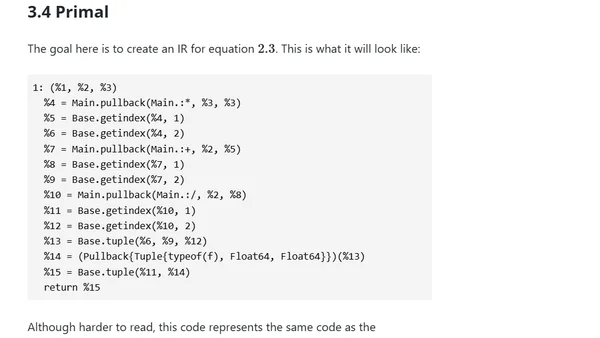

Extends a Julia automatic differentiation library (MicroGrad.jl) to handle map, getfield, and anonymous functions, enabling gradient descent for polynomial fitting.

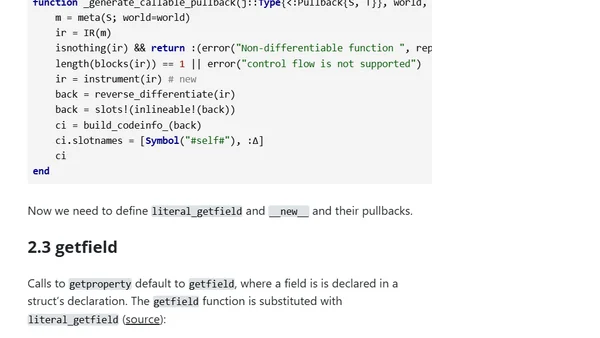

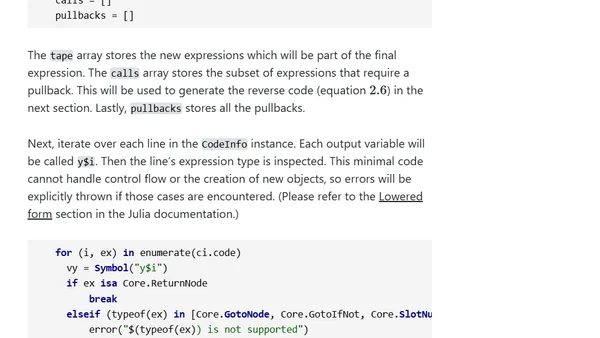

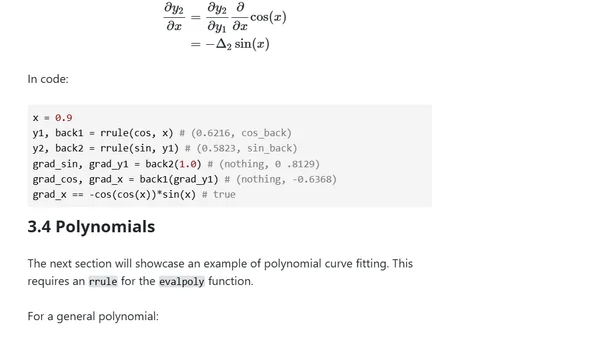

Explores using IRTools.jl for robust automatic differentiation in Julia, focusing on metaprogramming to generate forward and backward passes.

Explores automating differentiation in Julia with metaprogramming, using an expression-based approach to generate the forward and backward passes.

An introduction to building a minimal automatic differentiation package in Julia, focusing on explicit chain rules and the Julia AD ecosystem.