You Gotta Push If You Wanna Pull

Explores the shift from traditional pull queries to materialized views and data duplication, which make data available with better performance, in the right format, and in the right location.

Explores implementing a data mesh architecture using dbt, outlining how dbt Mesh projects can align with data mesh principles for large-scale organizations.

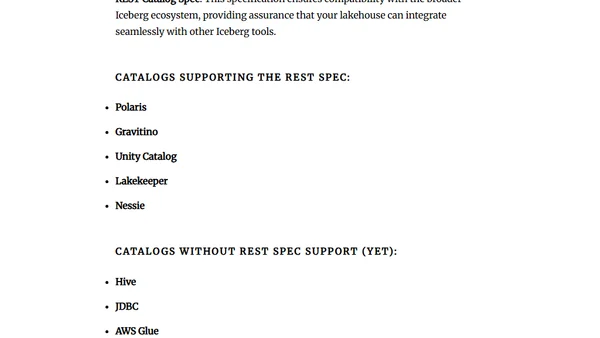

A comprehensive guide to the data lakehouse architecture, its core components (Iceberg, Delta, Hudi, Paimon), and the surrounding ecosystem for modern data platforms.

An introduction to data warehousing concepts, covering architecture, components, and performance optimization for analytical workloads.

Explains data lakes, their key characteristics, and how they differ from data warehouses in modern data architecture.

Explores core principles of scalable data engineering, including parallelism, minimizing data movement, and designing adaptable pipelines for growing data volumes.

Explores the modern data stack, cloud platforms, and principles for building flexible, cloud-native data engineering architectures.

Explains the data lakehouse architecture, a unified approach combining data lake scalability with warehouse management features like ACID transactions.

Explores reimagining Apache Kafka for the cloud, proposing a diskless, partition-free design with key-centric streams and topic hierarchies.

Explores reimagining Apache Kafka as a cloud-native event log, proposing features like partitionless design, key-centric access, and topic hierarchies.

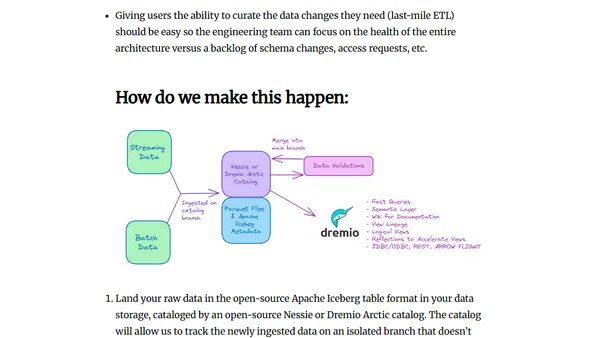

A technical guide on designing and implementing a modern data lakehouse architecture using the Apache Iceberg table format in 2025.

Explores how combining data lakehouse, virtualization, and mesh architectures with Dremio solves modern data scaling and silo challenges.

Explains why data professionals should adopt Dremio and Apache Iceberg for flexible, high-performance data lakehouse architecture.

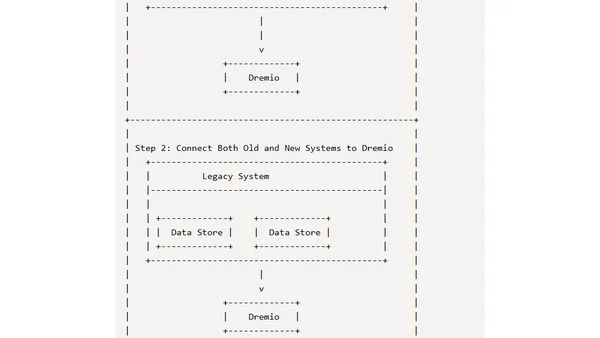

An introduction to data lakehouses, explaining what they are, why they're used, and how to migrate to this modern data architecture.

Explains how ontologies structure data for better interoperability, integration, and analysis across domains like healthcare and finance.

A guide to building a cost-effective, high-performance, and self-service data lakehouse architecture, addressing common pitfalls and outlining key principles.

Explains the data lakehouse concept, Dremio's role as a platform, and Apache Iceberg's function as a table format for modern data architectures.