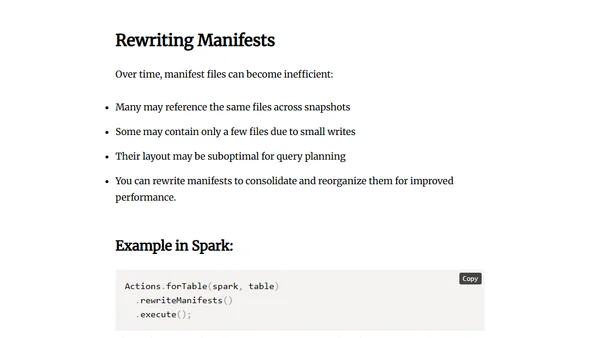

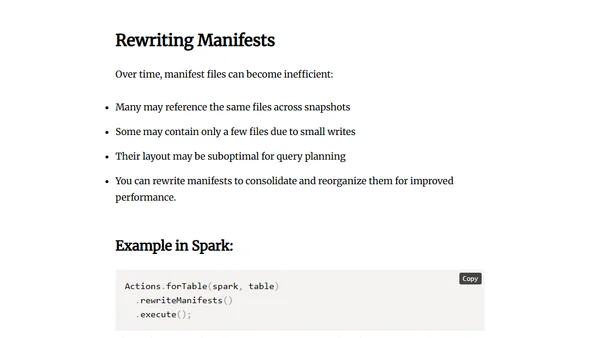

Avoiding Metadata Bloat with Snapshot Expiration and Rewriting Manifests

Explains how to manage Apache Iceberg table metadata by expiring old snapshots and rewriting manifests to prevent performance and cost issues.

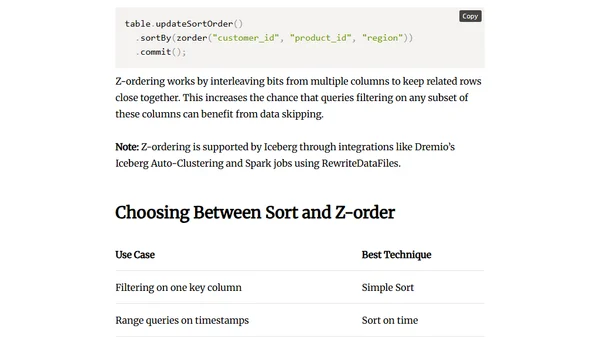

Explains how to use sorting and Z-order clustering in Apache Iceberg tables to optimize query performance and data layout.

Explains techniques for incremental, non-disruptive compaction in Apache Iceberg tables under continuous streaming data ingestion.

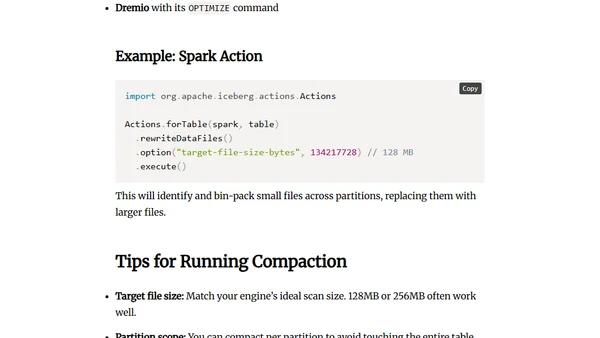

Explains data compaction using bin packing in Apache Iceberg to merge small files, improve query performance, and reduce metadata overhead.

A monthly roundup of data engineering links covering Apache Iceberg, Kafka, Debezium, Spark, and lakehouse architecture.

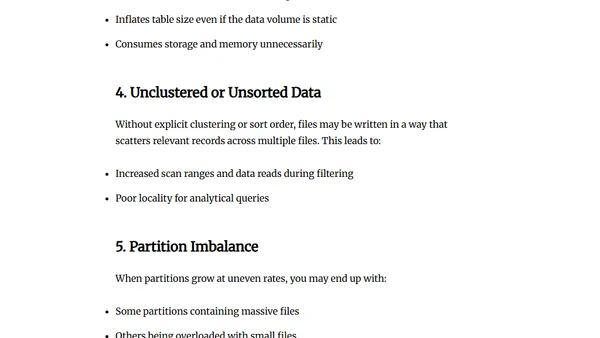

Explains how Apache Iceberg tables degrade without optimization, covering small files, fragmented manifests, and performance impacts.

Explains the importance of table maintenance in Apache Iceberg for data lakehouses, covering metadata and file management.

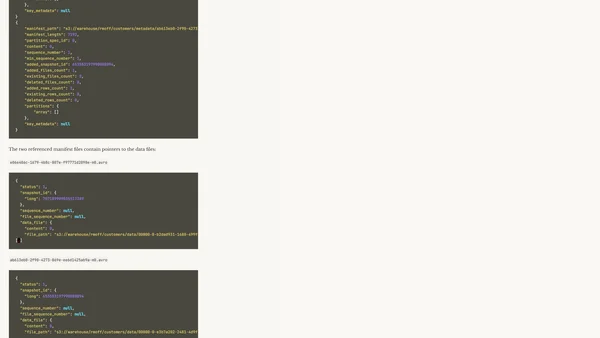

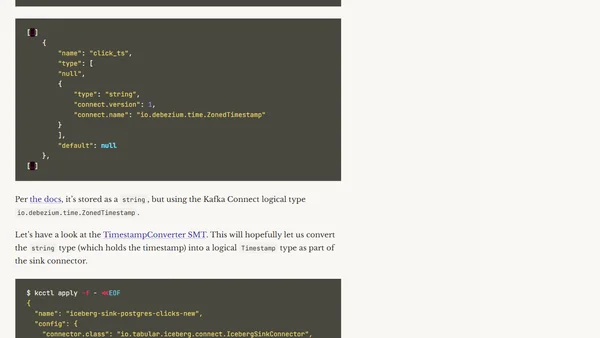

A technical guide on using Kafka Connect to write data from Kafka topics to Apache Iceberg tables stored on AWS S3, using the Glue Data Catalog.

A guide on how to find, join, and organize community meetups focused on Apache Iceberg and modern data lakehouse architectures.

A monthly roundup of tech links covering data lakehouses (DuckLake, Iceberg), Kafka, event streaming, and stream processing developments.

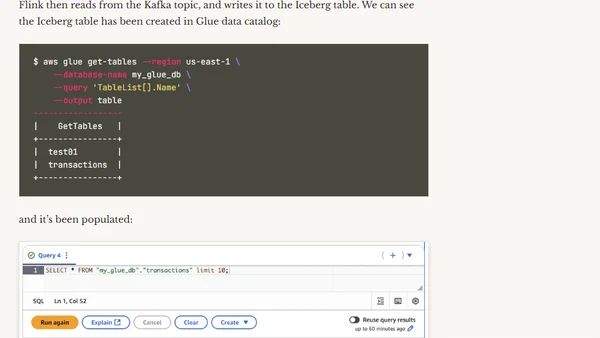

A technical guide on using Flink SQL to write data to Apache Iceberg tables stored on AWS S3, with metadata managed by the AWS Glue Data Catalog.

A monthly roundup of curated links and articles covering data engineering, Kafka, stream processing, and AI, with top picks highlighted.

Explains batch processing fundamentals for data engineering, covering concepts, tools, and why batch processing remains relevant in data workflows.

An introduction to data warehousing concepts, covering architecture, components, and performance optimization for analytical workloads.

An introductory guide to data engineering, explaining its role, key concepts, and how it differs from data science in the modern data ecosystem.

Explores the importance of data quality and validation in data engineering, covering key dimensions and tools for reliable pipelines.

Explains core data engineering concepts: metadata, data lineage, and governance, and their importance for scalable, compliant data systems.

Explains the importance of data storage formats and compression for performance and cost in large-scale data engineering systems.

Explores core principles of scalable data engineering, including parallelism, minimal data movement, and adaptable pipeline design for growing data volumes.

Explains data lakes, their key characteristics, and how they differ from data warehouses in modern data architecture.