Finetuning Large Language Models On A Single GPU Using Gradient Accumulation

A guide to finetuning large language models like BLOOM on a single GPU using gradient accumulation to overcome memory limits.
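
As a rough illustration of the idea behind gradient accumulation, the sketch below uses a toy one-parameter linear model in plain Python rather than BLOOM or any deep learning framework. It is not the article's code, and the function names (`mse_grad`, `accumulated_grad`) and the data are made up for this example. It shows that summing the gradients of several small micro-batches, each scaled by the number of accumulation steps, reproduces the gradient of the larger batch, assuming a mean-reduced loss and equal-sized micro-batches. That equivalence is what lets a memory-limited GPU emulate a larger batch size.

```python
# Toy sketch of gradient accumulation for y = w * x with mean-squared-error loss.
# All names and data here are hypothetical and chosen only for illustration.

def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w over one batch."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate gradients over equal-sized micro-batches.

    Each micro-batch gradient is divided by the number of accumulation
    steps so that the running sum matches the full-batch gradient.
    """
    assert len(xs) % micro_batch_size == 0, "sketch assumes equal-sized micro-batches"
    accumulation_steps = len(xs) // micro_batch_size
    total = 0.0
    for start in range(0, len(xs), micro_batch_size):
        xb = xs[start:start + micro_batch_size]
        yb = ys[start:start + micro_batch_size]
        total += mse_grad(w, xb, yb) / accumulation_steps
    return total


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
w = 0.5

full = mse_grad(w, xs, ys)                               # one batch of 8
accum = accumulated_grad(w, xs, ys, micro_batch_size=2)  # 4 micro-batches of 2

# The two gradients agree up to floating-point rounding.
print(abs(full - accum) < 1e-9)
```

In a real training loop the optimizer step would run only once per group of accumulated micro-batches, so only one micro-batch of activations has to fit in memory at a time.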

Sebastian Raschka, PhD, is an LLM Research Engineer and AI expert bridging academia and industry, specializing in large language models, high-performance AI systems, and practical, code-driven machine learning.

97 articles from this blog

A guide on managing the overwhelming volume of AI/ML research, sharing strategies and tools for prioritizing and staying updated effectively.

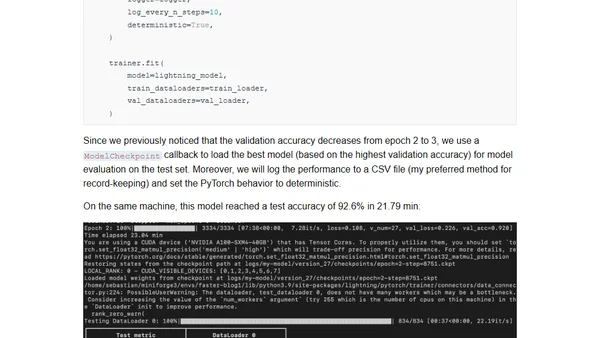

Techniques to accelerate PyTorch model training by 8x using PyTorch Lightning, with a DistilBERT fine-tuning example.

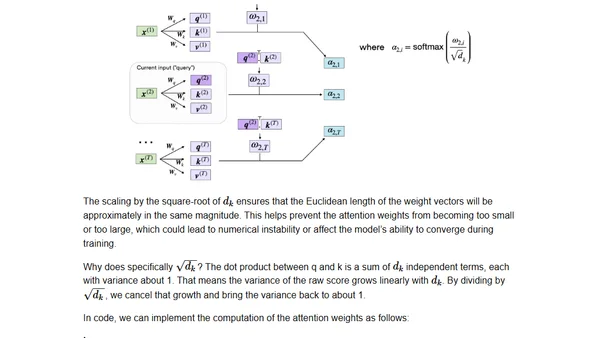

A technical guide to coding the self-attention mechanism from scratch, as used in transformers and large language models.

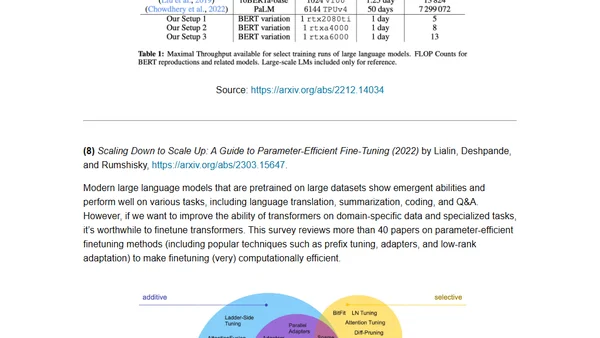

A curated reading list of key academic papers for understanding the development and architecture of large language models and transformers.

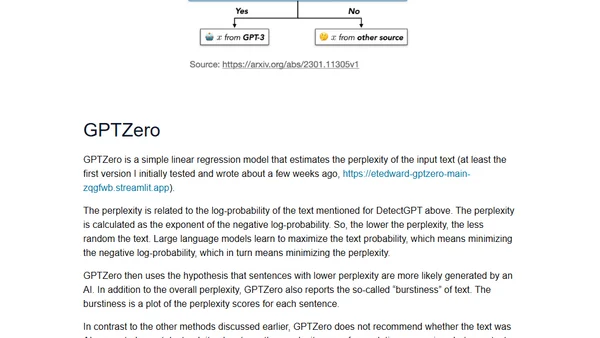

Explains four methods for detecting AI-generated text, including OpenAI's AI Classifier, DetectGPT, GPTZero, and watermarking, and how they work.

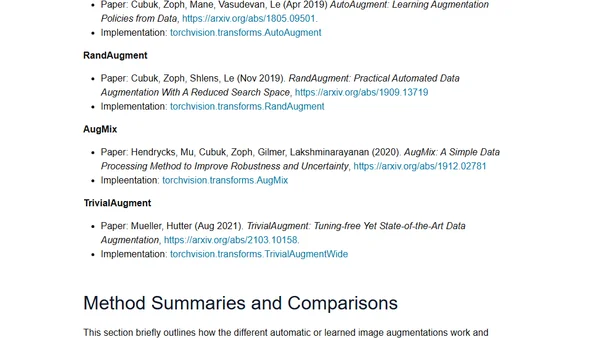

A comparison of four automatic image augmentation methods (AutoAugment, RandAugment, AugMix, TrivialAugment) in PyTorch for reducing overfitting in deep learning.

Discusses the limitations of AI chatbots like ChatGPT in providing accurate technical answers and proposes curated resources and expert knowledge as future solutions.

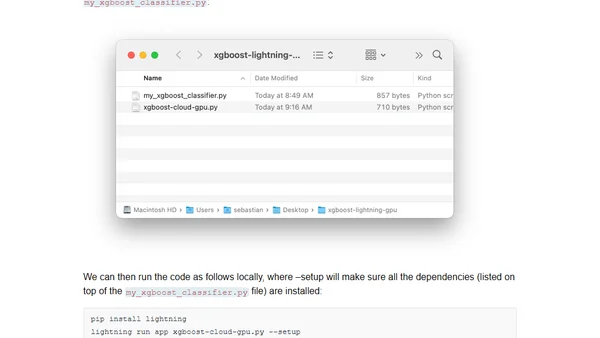

A guide to training XGBoost models on cloud GPUs using the Lightning AI framework, bypassing complex infrastructure setup.

A curated list of the top 10 open-source releases in Machine Learning & AI for 2022, including PyTorch 2.0 and scikit-learn 1.2.

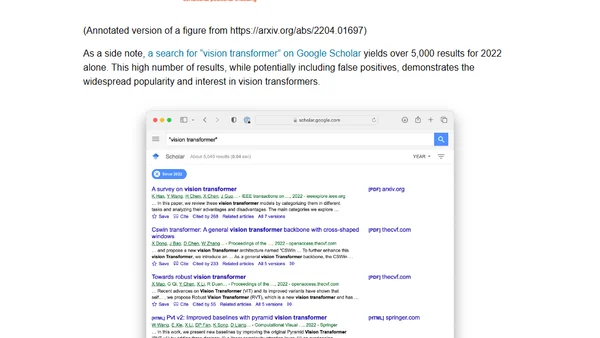

A review of the top 10 influential machine learning research papers from 2022, including ConvNeXt and MaxViT, highlighting key advancements in AI.

The author announces a new monthly AI newsletter, 'Ahead Of AI,' and shares updates on a passion project and conference appearances.

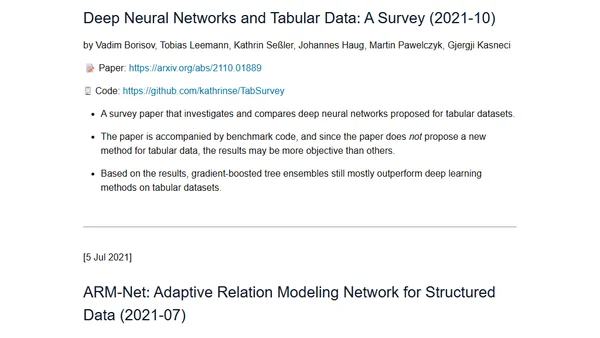

A curated list and summary of recent research papers exploring deep learning methods specifically designed for tabular data.

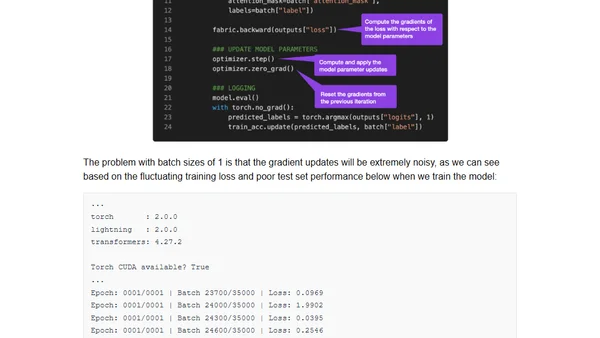

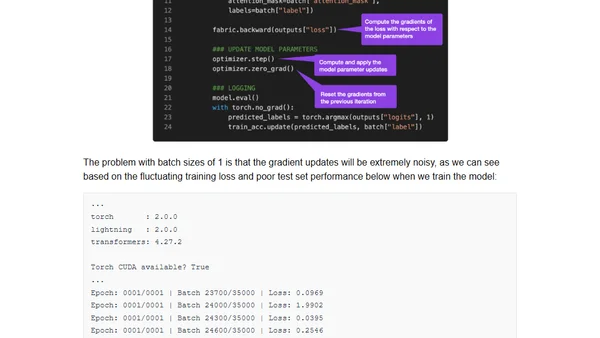

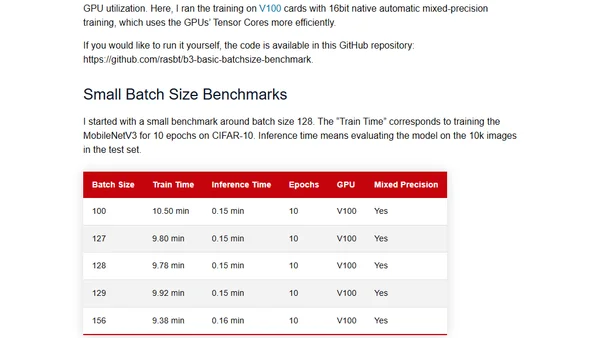

Examines the common practice of using powers of 2 for neural network batch sizes, questioning its necessity with practical and theoretical insights.

A guide to deploying and sharing deep learning research demos on the cloud using the Lightning framework, including model training.

A tutorial on building a Super Resolution GAN demo app using the Lightning framework to share deep learning research models.

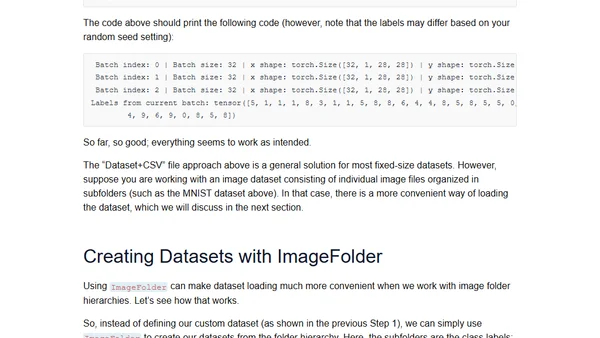

A hands-on exploration of PyTorch's new DataPipes for efficient data loading, comparing them to traditional Datasets and DataLoaders.

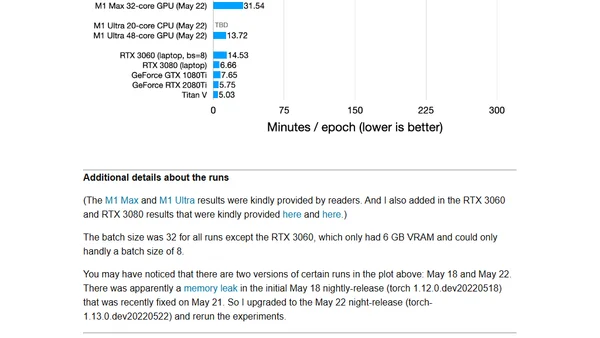

A hands-on review and benchmark of PyTorch's new official GPU support for Apple's M1 chips, covering installation and performance.

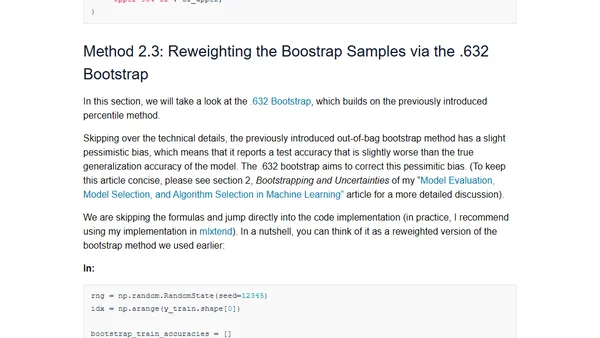

A technical guide explaining methods for creating confidence intervals to measure uncertainty in machine learning model performance.

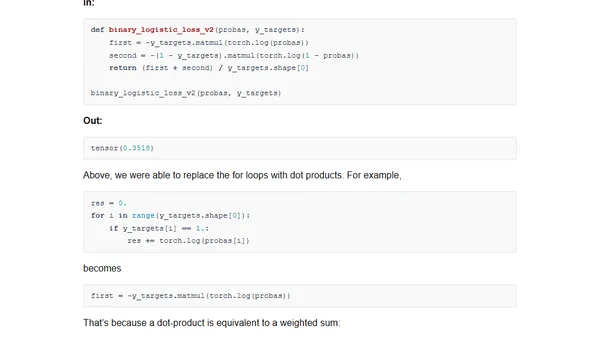

Explains cross-entropy loss in PyTorch for binary and multiclass classification, highlighting common implementation pitfalls and best practices.