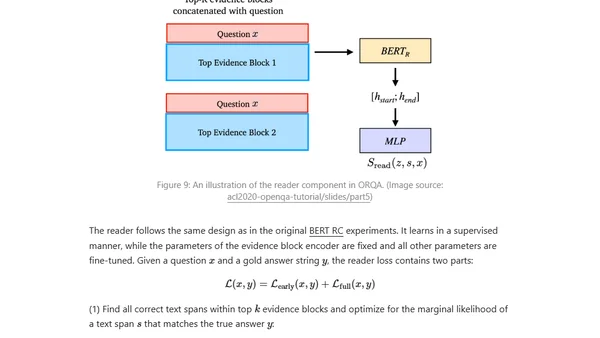

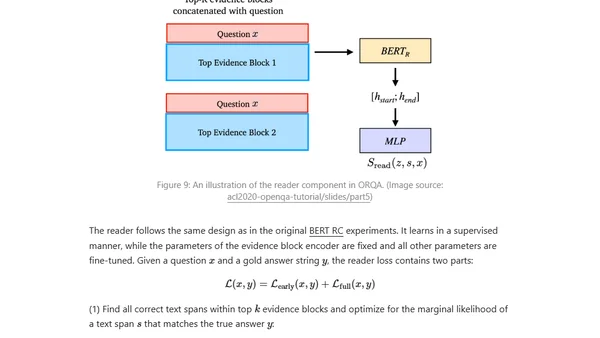

How to Build an Open-Domain Question Answering System?

A technical overview of approaches for building open-domain question answering systems using pretrained language models and neural networks.

Lilian Weng is a machine learning researcher who publishes in-depth, well-researched learning notes on large language models, reinforcement learning, and generative AI. Her blog offers clear, structured insights into model reasoning, alignment, hallucinations, and modern ML systems.

50 articles from this blog

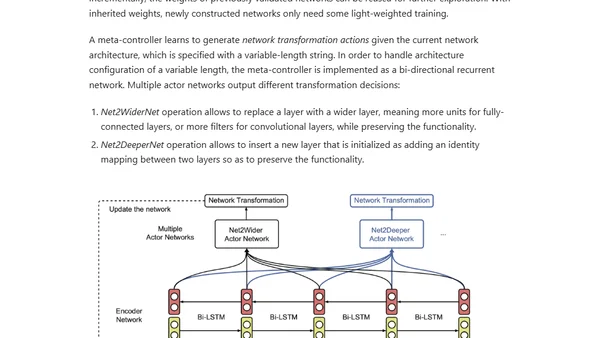

An overview of Neural Architecture Search (NAS), covering its core components: search space, algorithms, and evaluation strategies for automating AI model design.

An overview of key exploration strategies in Deep Reinforcement Learning, including classic methods and modern approaches for tackling hard-exploration problems.

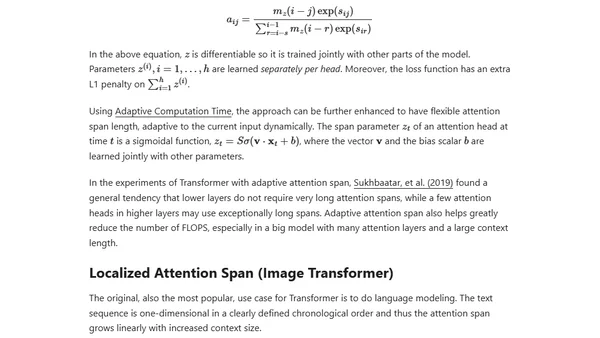

An updated overview of the Transformer model family, covering improvements for longer attention spans, efficiency, and new architectures since 2020.

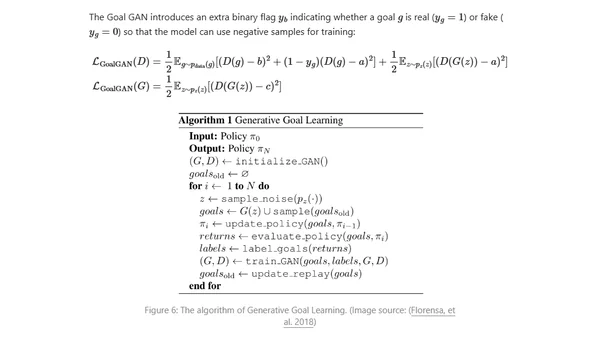

Explores curriculum learning strategies for training reinforcement learning models more efficiently, from simple to complex tasks.

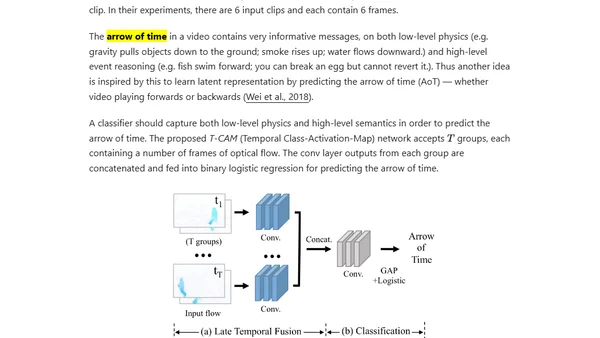

Explores self-supervised learning, a method for training models on unlabeled data by constructing supervised tasks from the data itself, covering key concepts and models.

An introduction to Evolution Strategies (ES) as a black-box optimization alternative to gradient descent, with applications in deep reinforcement learning.

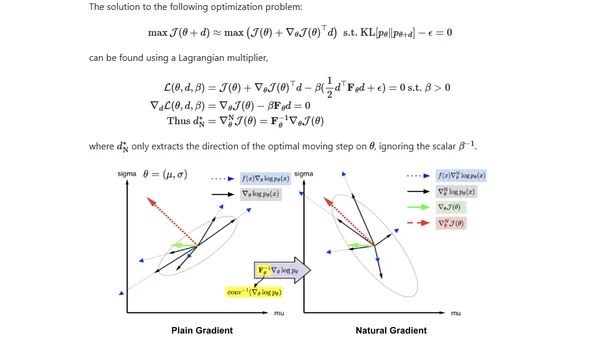

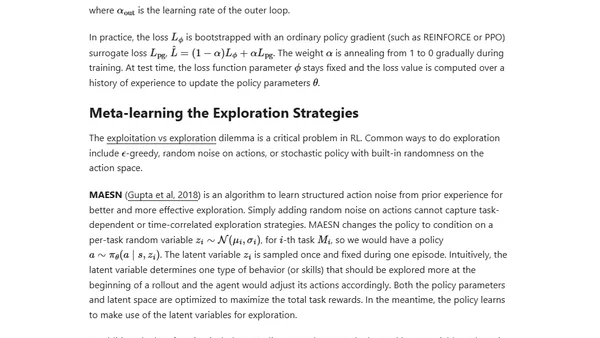

Explores meta reinforcement learning, where agents learn to adapt quickly to new, unseen RL tasks, aiming for general-purpose problem-solving algorithms.

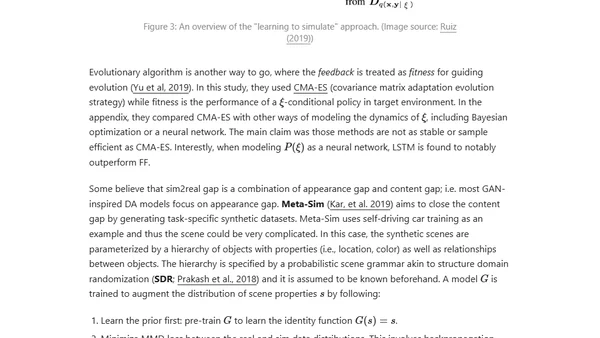

Explores domain randomization as a technique to bridge the simulation-to-reality gap in robotics and deep reinforcement learning.

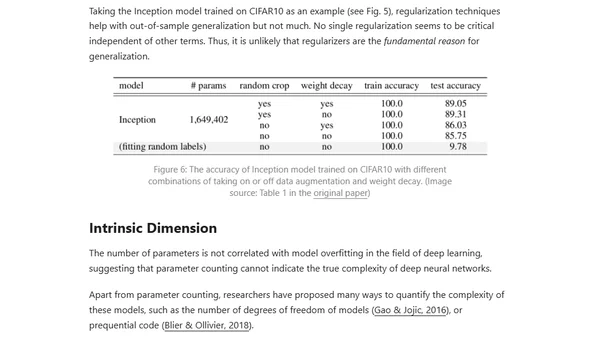

Explores the paradox of why deep neural networks generalize well despite having many parameters, discussing theories like Occam's Razor and the Lottery Ticket Hypothesis.

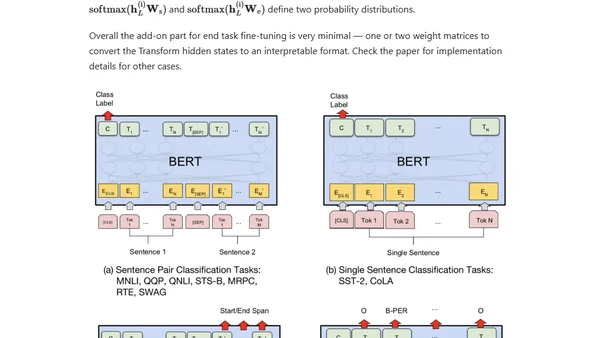

A technical overview of the evolution of large-scale pre-trained language models like BERT, GPT, and T5, focusing on contextual embeddings and transfer learning in NLP.

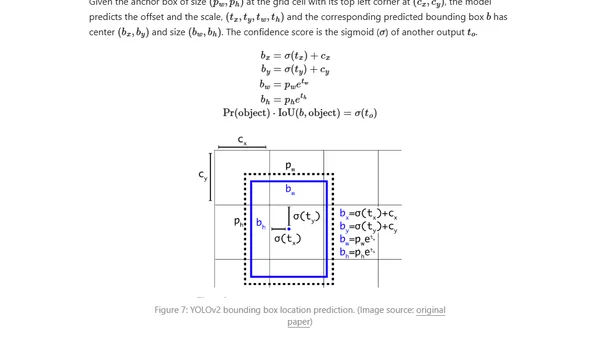

Explores fast, one-stage object detection models like YOLO, SSD, and RetinaNet, comparing them to slower two-stage R-CNN models.

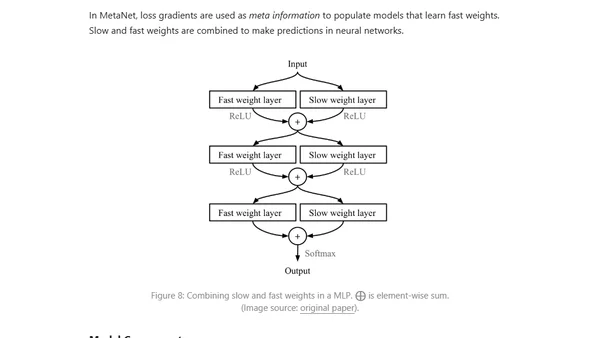

An introduction to meta-learning, a machine learning approach in which models learn to adapt quickly to new tasks with minimal data, often described as "learning to learn".

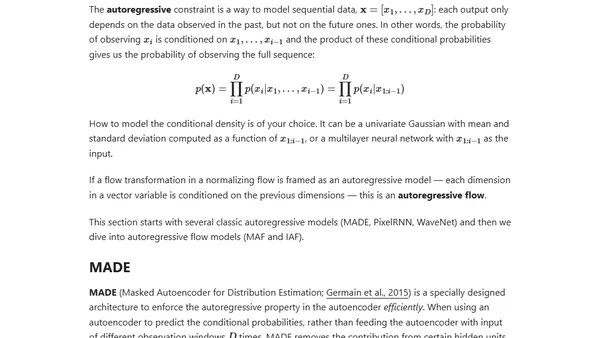

An introduction to flow-based deep generative models, explaining how they explicitly learn data distributions using normalizing flows, compared to GANs and VAEs.

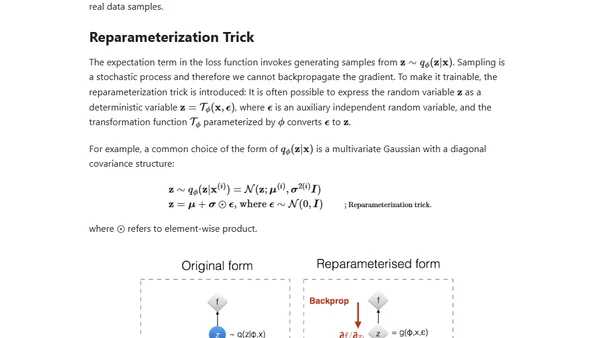

Explores the evolution from basic Autoencoders to Beta-VAE, covering their architecture, mathematical notation, and applications in dimensionality reduction.

Explains the attention mechanism in deep learning, its motivation from human perception, and its role in improving seq2seq models and in architectures such as the Transformer.

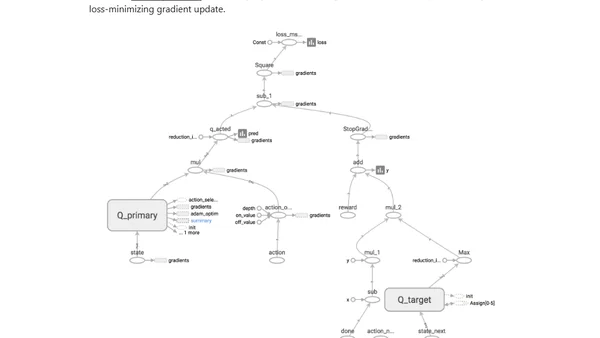

A hands-on tutorial on implementing deep reinforcement learning models using TensorFlow and the OpenAI Gym environment.

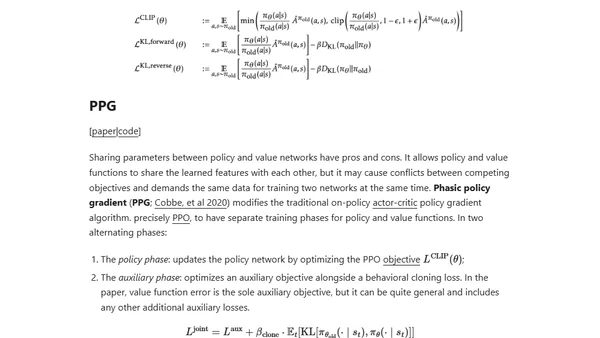

A comprehensive overview of policy gradient algorithms in reinforcement learning, covering key concepts, notations, and various methods.

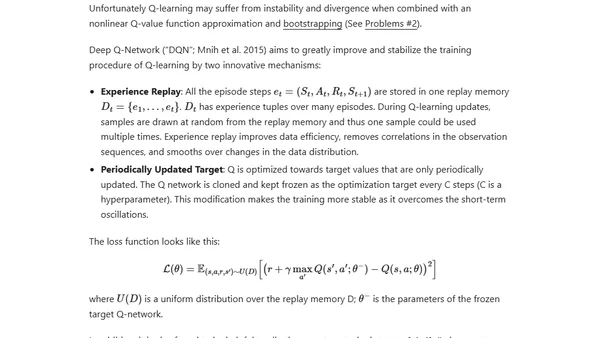

An introductory guide to Reinforcement Learning (RL), covering key concepts, algorithms like SARSA and Q-learning, and its role in AI breakthroughs.

Explores the Multi-Armed Bandit problem, a classic dilemma balancing exploration and exploitation in decision-making algorithms.