Object Detection for Dummies Part 3: R-CNN Family

Explores the R-CNN family of models for object detection, covering R-CNN, Fast R-CNN, Faster R-CNN, and Mask R-CNN with technical details.

Lilian Weng is a machine learning researcher whose blog collects in-depth, well-researched learning notes on large language models, reinforcement learning, and generative AI. Her posts offer clear, structured insights into model reasoning, alignment, hallucinations, and modern ML systems.

50 articles from this blog


Explores classic CNN architectures for image classification, including AlexNet, VGG, and ResNet, which serve as foundational models for object detection.

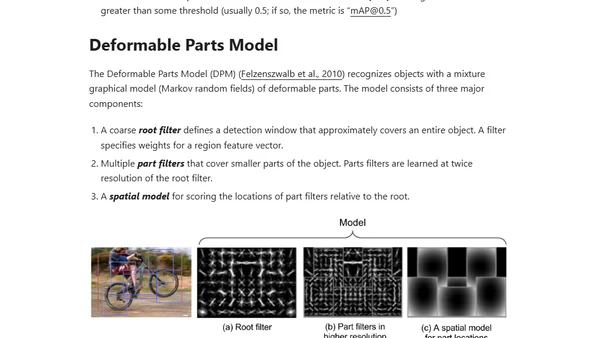

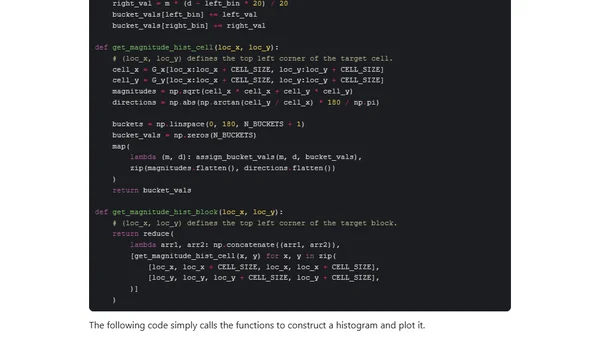

An introductory guide to the fundamental concepts of object detection, covering image gradients, HOG, and segmentation, as a precursor to deep learning methods.

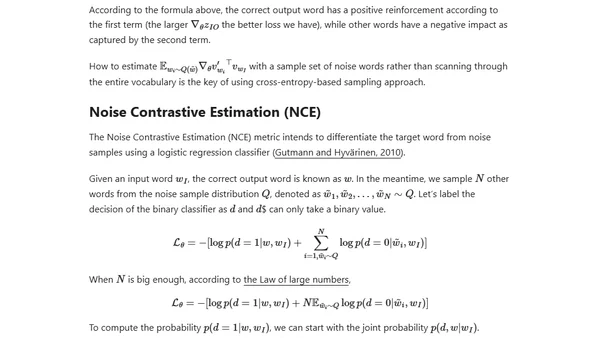

Explains word embeddings, comparing count-based and context-based methods like skip-gram for converting words into dense numeric vectors.

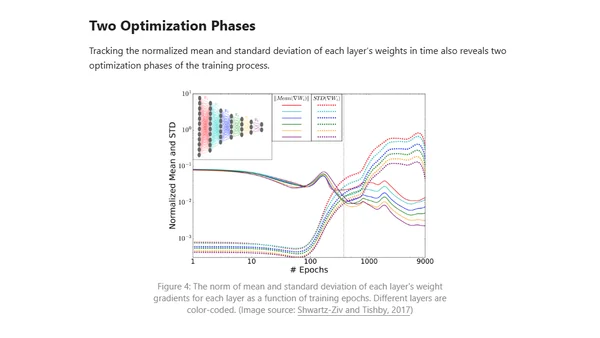

Explores how information theory, specifically the Information Bottleneck method, can be applied to analyze training phases and learning bounds in deep neural networks.

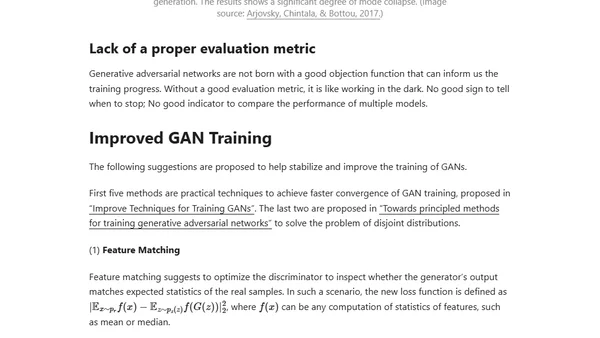

Explains the math behind GANs and their training challenges, and introduces WGAN as a solution for improved training stability.

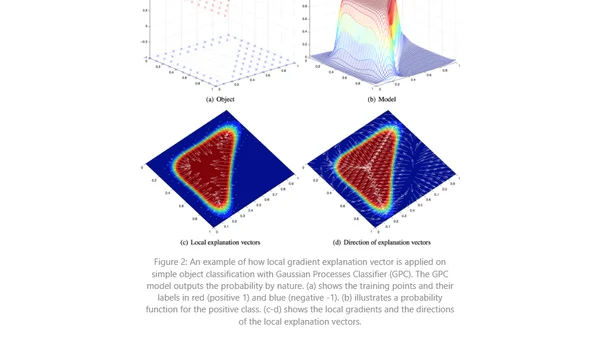

Explores why interpreting ML model predictions matters, especially in regulated fields, and reviews interpretable models such as linear regression.

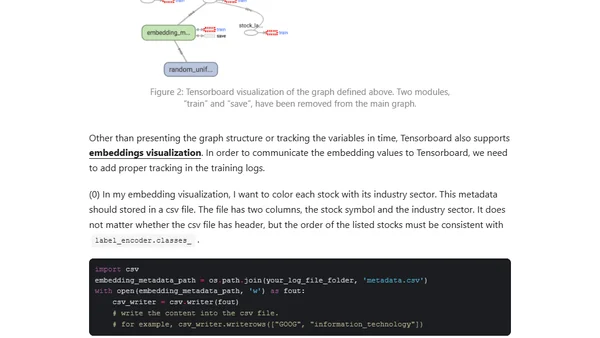

Part 2 of a tutorial on using RNNs with stock symbol embeddings to predict prices for multiple stocks.

A tutorial on building a Recurrent Neural Network (RNN) with LSTM cells in TensorFlow to predict S&P 500 stock prices.

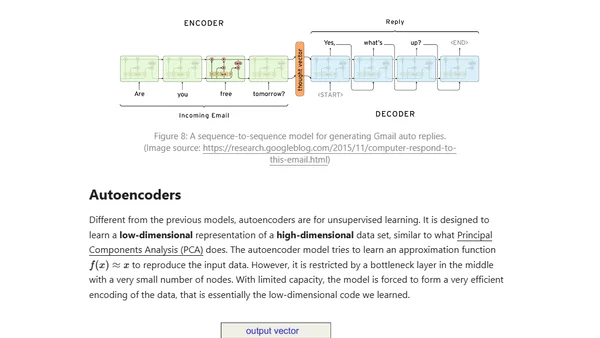

An introduction to deep learning that explains its rise, key concepts such as CNNs, and why it has become so powerful recently, thanks to advances in data availability and computing power.