4/1/2021

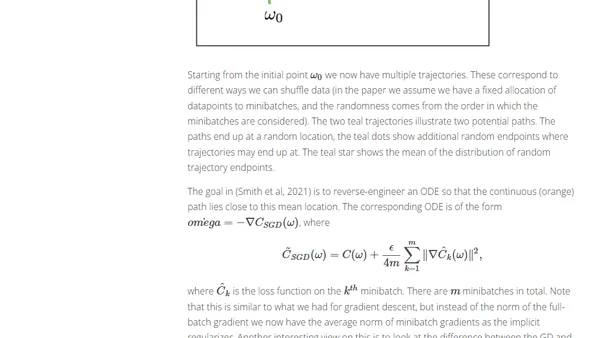

Notes on the Origin of Implicit Regularization in SGD

Explores how stochastic gradient descent (SGD) implicitly favors certain minima, and how this preference may explain why deep networks generalize better than classical learning theory predicts.