Poisoning Well

Explores the ethics of LLM training data and proposes a technical method to poison AI crawlers using nofollow links.

Describes a developer's frustration with aggressive LLM crawlers that cause outages and consume resources, and recounts earlier abuse such as crypto mining and Go module mirror issues.

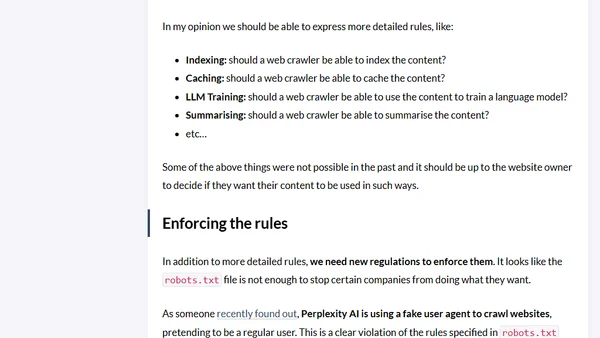

Argues for an evolved robots.txt standard with AI-specific rules, backed by regulations to enforce them, citing Perplexity AI's violations.

A guide to building a polite web crawler that respects robots.txt, manages crawl frequency, and avoids overloading servers, with an example in .NET.

How to use the handroll static site generator to create sitemaps and robots.txt files for better search engine visibility.

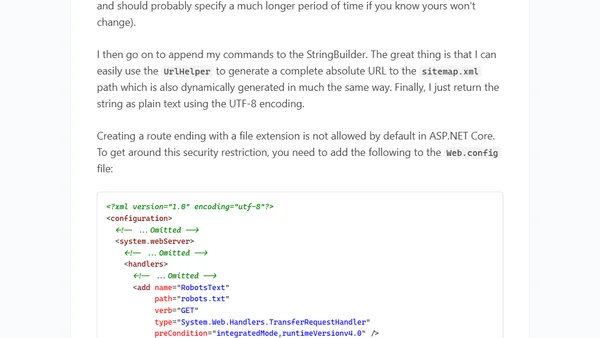

A guide to dynamically generating a robots.txt file in ASP.NET MVC, including SEO benefits and code examples.

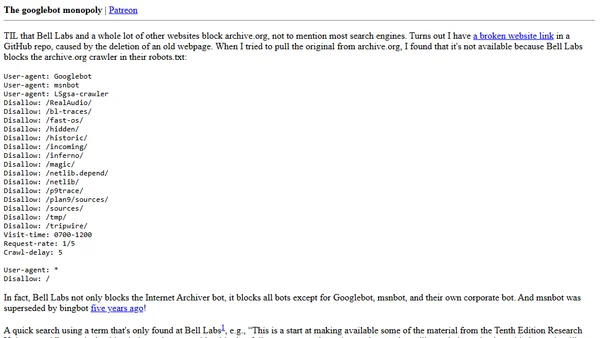

Explores how websites blocking non-Google crawlers in robots.txt files can harm web archives and create a search engine monopoly.

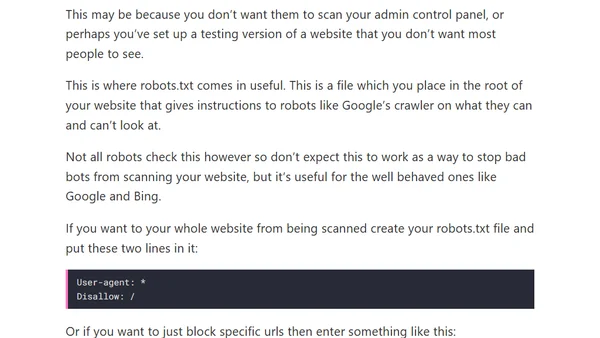

A guide on using the robots.txt file to control search engine access to specific parts or the entirety of a website.