MicroGrad.jl: Part 5 MLP

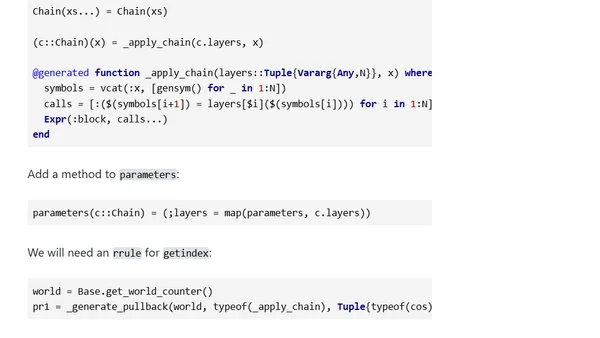

This article is the fifth part of a series on implementing automatic differentiation in Julia. It demonstrates how the MicroGrad.jl package can serve as the backbone for a machine learning framework similar to Flux.jl. The tutorial walks through building a multi-layer perceptron (MLP), implementing ReLU and Dense layers, and training the network to classify the moons dataset, which is not linearly separable.
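To make the summary concrete, here is a minimal sketch of a Dense layer, a ReLU activation, and an MLP forward pass in plain Julia. It is not MicroGrad.jl's actual API: the names `DenseSketch` and `forward`, the layer sizes, and the weight scaling are assumptions chosen for illustration, and no gradients or training are shown.

```julia
# Illustrative only: hypothetical names, not taken from MicroGrad.jl or Flux.jl.

# ReLU: zero out negative values, pass positive values through.
relu(x) = max(zero(x), x)

# A dense (fully connected) layer: activation.(W * x .+ b)
struct DenseSketch{M<:AbstractMatrix, V<:AbstractVector, F}
    weight::M
    bias::V
    activation::F
end

# Convenience constructor: small random weights, zero biases (assumed scheme).
DenseSketch(n_in::Int, n_out::Int, activation=identity) =
    DenseSketch(0.1 .* randn(n_out, n_in), zeros(n_out), activation)

# Make the layer callable. `x` has shape (features, samples).
(layer::DenseSketch)(x) = layer.activation.(layer.weight * x .+ layer.bias)

# An MLP is the layers applied one after another.
forward(layers, x) = foldl((h, layer) -> layer(h), layers; init=x)

mlp = [DenseSketch(2, 16, relu), DenseSketch(16, 16, relu), DenseSketch(16, 2)]

x = randn(2, 5)           # five 2-D points, the input shape of the moons dataset
logits = forward(mlp, x)  # 2×5 matrix: one score per class per point
```

Making the struct callable keeps each layer an ordinary value that can be stored in a collection and composed, which is the general pattern Flux.jl-style frameworks follow. Whether the original article structures its layers in exactly this way is not stated in this summary.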
