1/10/2023

Large Transformer Model Inference Optimization

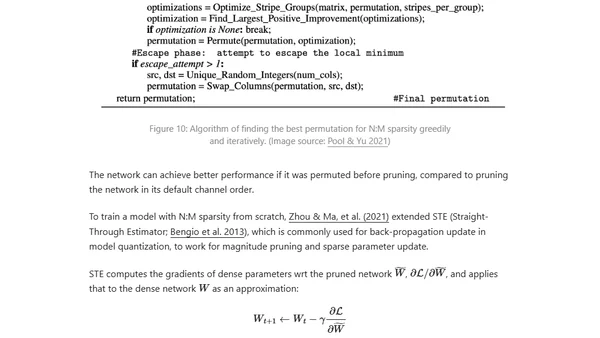

Explores techniques to optimize inference speed and memory usage for large transformer models, including distillation, pruning, and quantization.