Deep Dive into Dremio's File-based Auto Ingestion into Apache Iceberg Tables

A guide to setting up and using Dremio's Auto-Ingest feature for automated, event-driven data loading into Apache Iceberg tables from cloud storage.

Alex Merced — Developer and technical writer sharing in-depth insights on data engineering, Apache Iceberg, data lakehouse architectures, Python tooling, and modern analytics platforms, with a strong focus on practical, hands-on learning.

333 articles from this blog

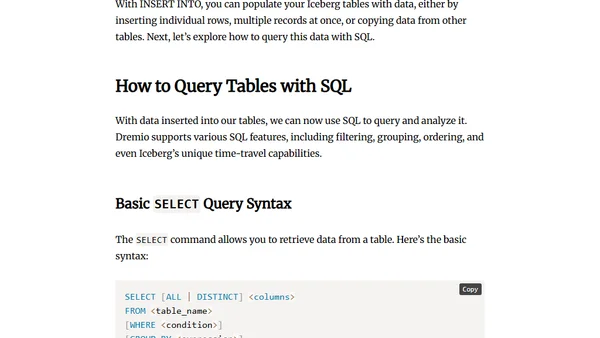

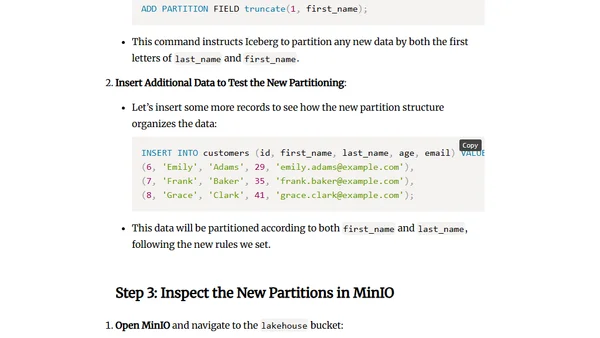

A tutorial on using SQL with Apache Iceberg tables in the Dremio data lakehouse platform, covering setup and core operations.

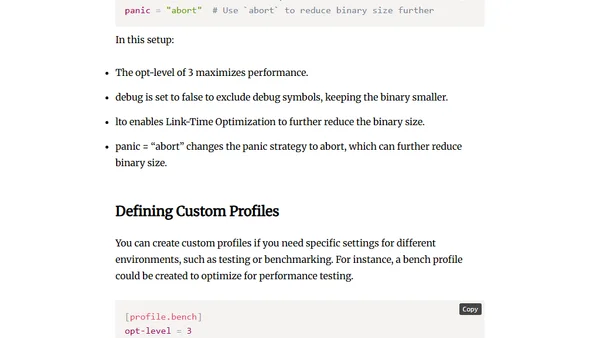

A guide to understanding and using the Cargo.toml file, the central configuration file for managing Rust projects and their dependencies with Cargo.

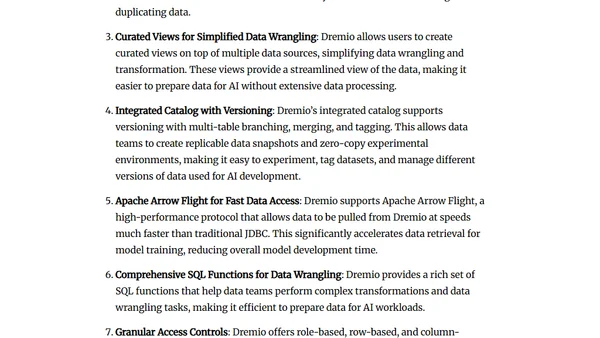

Explores how Dremio and Apache Iceberg create AI-ready data by ensuring accessibility, scalability, and governance for machine learning workloads.

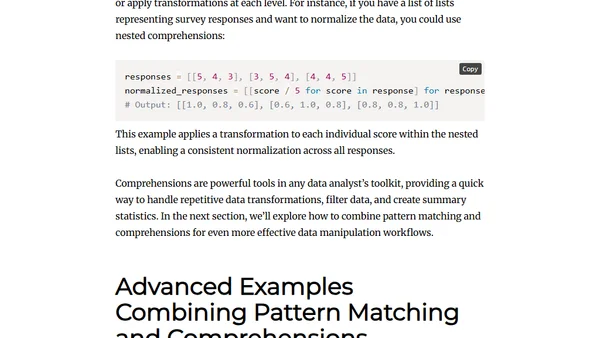

Explores using Python's pattern matching and comprehensions for efficient data cleaning, transformation, and analysis.

A hands-on tutorial for setting up a local data lakehouse with Apache Iceberg, Dremio, and Nessie using Docker in under 10 minutes.

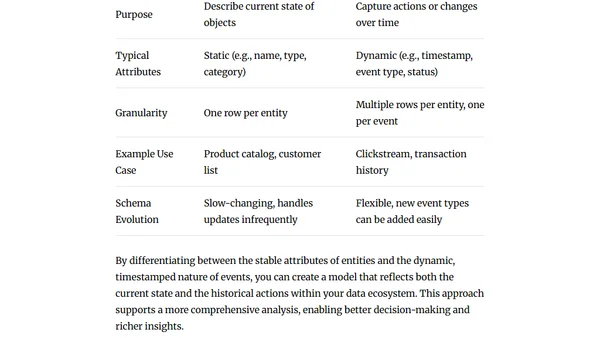

Explores the differences between event and entity data modeling, when to use each approach, and practical design considerations for structuring data effectively.
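The sketch below illustrates the distinction. It keeps an append-only log of events, where each row is something that happened. It then derives entity data (one current balance per account) by replaying that log. The account/deposit domain and the `Event` and `to_entities` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One immutable fact: something that happened to an account at a point in time."""
    account_id: str
    kind: str    # "deposit" or "withdrawal" (hypothetical event types)
    amount: int  # amount in cents
    ts: int      # event time, e.g. epoch seconds


def to_entities(events: list[Event]) -> dict[str, int]:
    """Fold the event log into entity state: the current balance per account."""
    balances: dict[str, int] = {}
    for e in sorted(events, key=lambda ev: ev.ts):  # replay in time order
        sign = 1 if e.kind == "deposit" else -1
        balances[e.account_id] = balances.get(e.account_id, 0) + sign * e.amount
    return balances


events = [
    Event("a1", "deposit", 1000, 1),
    Event("a1", "withdrawal", 250, 2),
    Event("a2", "deposit", 500, 3),
]
current = to_entities(events)
```

The event log preserves full history, which is useful for auditing and time-based questions. The entity view answers "what is true now" cheaply but loses how it got there.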

A practical guide to reading and writing Parquet files in Python using the PyArrow and fastparquet libraries.

Final guide in a series covering performance tuning and best practices for optimizing Apache Parquet files in big data workflows.

Explores why Parquet is the ideal columnar file format for optimizing storage and query performance in modern data lake and lakehouse architectures.

Explores how metadata in Parquet files improves data efficiency and query performance, covering file, row group, and column-level metadata.
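This simplified sketch shows why row-group statistics matter. If each row group records the min and max of a column, a reader can skip groups that cannot satisfy a filter without touching their values. The code models the idea in plain Python and does not read or write real Parquet files. `RowGroup`, `write_row_groups`, and `scan_greater_than` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RowGroup:
    """A simplified stand-in for a Parquet row group: column values plus min/max stats."""
    values: list[int]
    min_value: int
    max_value: int


def write_row_groups(column: list[int], rows_per_group: int) -> list[RowGroup]:
    """Split one column into row groups and record min/max statistics for each."""
    groups = []
    for start in range(0, len(column), rows_per_group):
        chunk = column[start:start + rows_per_group]
        groups.append(RowGroup(chunk, min(chunk), max(chunk)))
    return groups


def scan_greater_than(groups: list[RowGroup], threshold: int) -> tuple[list[int], int]:
    """Return values > threshold and the number of row groups whose values were read.

    A group whose max is <= threshold cannot contain a match, so it is skipped
    using its statistics alone.
    """
    matches: list[int] = []
    groups_read = 0
    for g in groups:
        if g.max_value <= threshold:
            continue  # pruned by metadata; values never scanned
        groups_read += 1
        matches.extend(v for v in g.values if v > threshold)
    return matches, groups_read


groups = write_row_groups(list(range(100)), rows_per_group=25)
hits, read = scan_greater_than(groups, threshold=80)
```

With four row groups of 25 values each, the filter `> 80` only needs to read the last group. The other three are ruled out by their max values.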

Explores compression algorithms in Parquet files, comparing Snappy, Gzip, Brotli, Zstandard, and LZO for storage and performance.

Explains encoding techniques in Parquet files, including dictionary, RLE, bit-packing, and delta encoding, to optimize storage and performance.
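The core ideas behind dictionary, run-length, and delta encoding fit in a few lines of Python. This is a toy model over in-memory lists. The actual Parquet encodings (for example the RLE/bit-packing hybrid and DELTA_BINARY_PACKED) operate on bytes and are considerably more involved.

```python
def dictionary_encode(values: list[str]) -> tuple[list[str], list[int]]:
    """Replace each value with an index into a list of its distinct values."""
    dictionary: list[str] = []
    positions: dict[str, int] = {}
    indices: list[int] = []
    for v in values:
        if v not in positions:
            positions[v] = len(dictionary)
            dictionary.append(v)
        indices.append(positions[v])
    return dictionary, indices


def run_length_encode(values: list[int]) -> list[tuple[int, int]]:
    """Collapse runs of repeated values into (value, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


def delta_encode(values: list[int]) -> tuple[int, list[int]]:
    """Store the first value plus the differences between consecutive values."""
    return values[0], [b - a for a, b in zip(values, values[1:])]


countries = ["US", "US", "US", "DE", "DE", "US"]
dictionary, idx = dictionary_encode(countries)  # strings -> small integer ids
runs = run_length_encode(idx)                   # repeated ids -> (id, count) runs
first, deltas = delta_encode([1000, 1001, 1003, 1006])
```

The encodings compose. Dictionary encoding turns repeated strings into small integers, and those integers then run-length encode well. Delta encoding turns large, slowly increasing numbers into small differences that need fewer bits.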

Explains, for data engineers, how Parquet handles schema evolution, including adding or removing columns and changing data types.
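This minimal sketch shows the reader-side logic, assuming rows are plain dicts and the current schema maps each column to a type and a default:

- Added columns are filled with the default.
- Removed columns are dropped.
- A widened type (int to float) is cast.

The schema and column names are hypothetical. Real Parquet readers resolve schemas from file metadata rather than from per-row dicts.

```python
from typing import Any

# Hypothetical current schema: column -> (type, default for files written before it existed)
NEW_SCHEMA: dict[str, tuple[type, Any]] = {
    "id": (int, None),
    "price": (float, None),    # widened from int to float
    "currency": (str, "USD"),  # added column
}                              # "legacy_flag" was removed


def read_with_schema(row: dict[str, Any], schema: dict[str, tuple[type, Any]]) -> dict[str, Any]:
    """Project a row written under an older schema onto the current one."""
    out: dict[str, Any] = {}
    for name, (typ, default) in schema.items():
        if name in row and row[name] is not None:
            out[name] = typ(row[name])  # cast, e.g. int -> float for a widened column
        else:
            out[name] = default         # column absent in the old data: use the default
    return out                          # columns not in the schema (removed ones) are dropped


old_row = {"id": 7, "price": 20, "legacy_flag": True}
new_row = read_with_schema(old_row, NEW_SCHEMA)
```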

Explains the hierarchical structure of Parquet files, detailing how pages, row groups, and columns optimize storage and query performance.

Explains Parquet's columnar storage model, detailing its efficiency for big data analytics through faster queries, better compression, and optimized aggregation.
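This tiny Python sketch shows the difference. The same records are stored row by row and then pivoted into one list per column. An aggregation over `sales` then needs only that one list. The data is made up for illustration.

```python
rows = [
    {"id": 1, "region": "east", "sales": 120.0},
    {"id": 2, "region": "west", "sales": 80.0},
    {"id": 3, "region": "east", "sales": 200.0},
]

# Row-oriented -> column-oriented: one list per column, rows kept in the same order
columns = {name: [r[name] for r in rows] for name in rows[0]}

# An aggregation reads only the one column it needs
total_sales = sum(columns["sales"])

# Values within a column share a type and often repeat, which helps encoding and compression
distinct_regions = len(set(columns["region"]))
```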

An introduction to Apache Parquet, a columnar storage file format for efficient data processing and analytics.

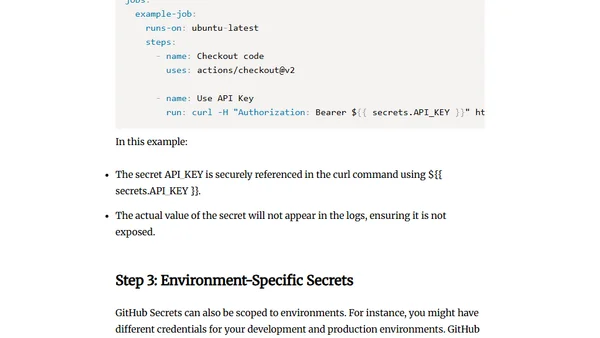

Explores using GitHub Actions both for CI/CD in software development and for advanced data engineering tasks such as ETL pipelines and data orchestration.

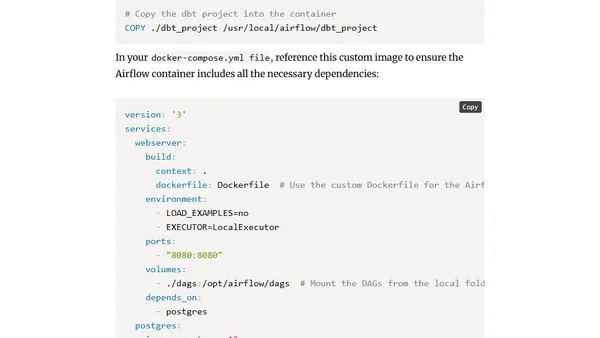

Using GitHub Actions to trigger Airflow DAGs for orchestrating data pipelines across Spark, Dremio, and Snowflake.
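One common way to trigger a DAG from a workflow step is Airflow's stable REST API. The sketch below only builds the request. It assumes Airflow 2.x with the `POST /api/v1/dags/{dag_id}/dagRuns` endpoint and basic authentication enabled. The DAG id, URL, and credentials are hypothetical; in a real workflow the credentials would come from repository secrets.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url: str, dag_id: str, conf: dict,
                          user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST asking Airflow 2.x to start a new DAG run.

    Assumes the stable REST API with basic auth enabled; all arguments are placeholders.
    """
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    body = json.dumps({"conf": conf}).encode("utf-8")  # run-level config passed to the DAG
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )


req = build_trigger_request(
    "http://localhost:8080/", "ingest_to_iceberg", {"source": "github-actions"}, "admin", "admin"
)
# Sending it (e.g. from a workflow step) would be: urllib.request.urlopen(req)
```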

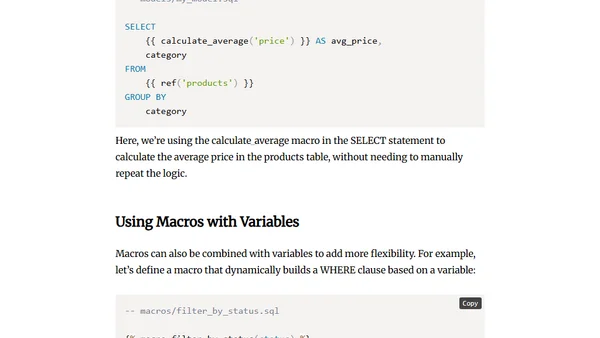

A guide explaining dbt macros, their purpose, benefits, and how to use them to write reusable, standardized SQL code in data transformation projects.