Gated Multimodal Units for Information Fusion

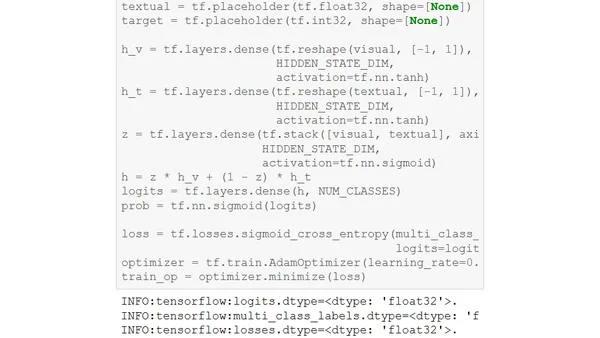

This technical article details the Gated Multimodal Unit (GMU), a neural network component for multimodal information fusion. It explains the GMU's self-attention mechanism, which allows a model to dynamically weight input from different modalities (e.g., vision and text) based on their relevance. The post includes the model's equations and a practical implementation with a synthetic dataset to demonstrate how the GMU learns to ignore noisy input channels.
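The summary mentions the model's equations without reproducing them, so here is a minimal sketch of a two-modality GMU forward pass in NumPy. It assumes the commonly cited bimodal formulation (a tanh projection per modality and a sigmoid gate computed from the concatenated inputs); the function name `gmu_forward`, the weight names, and the random toy inputs are illustrative assumptions, not code taken from the original post.

```python
import numpy as np


def sigmoid(a):
    """Elementwise logistic function."""
    return 1.0 / (1.0 + np.exp(-a))


def gmu_forward(x_v, x_t, W_v, W_t, W_z):
    """Bimodal GMU forward pass (assumed formulation, biases omitted).

    h_v = tanh(x_v W_v)            hidden features from the visual input
    h_t = tanh(x_t W_t)            hidden features from the textual input
    z   = sigmoid([x_v, x_t] W_z)  gate computed from both raw inputs
    h   = z * h_v + (1 - z) * h_t  gated combination of the two modalities
    """
    h_v = np.tanh(x_v @ W_v)
    h_t = np.tanh(x_t @ W_t)
    z = sigmoid(np.concatenate([x_v, x_t], axis=-1) @ W_z)
    h = z * h_v + (1.0 - z) * h_t
    return h, z


# Toy example: random inputs and weights, purely illustrative.
rng = np.random.default_rng(0)
n, d_v, d_t, d_h = 4, 5, 3, 2          # batch size and dimensions (arbitrary)
x_v = rng.normal(size=(n, d_v))        # stand-in "visual" features
x_t = rng.normal(size=(n, d_t))        # stand-in "textual" features
W_v = rng.normal(size=(d_v, d_h))
W_t = rng.normal(size=(d_t, d_h))
W_z = rng.normal(size=(d_v + d_t, d_h))

h, z = gmu_forward(x_v, x_t, W_v, W_t, W_z)
```

Each entry of `z` lies between 0 and 1, so every hidden unit is a convex combination of the two modality-specific activations. A gate value near 0 for a given unit means that unit relies almost entirely on the textual features, which is the behavior the post describes when one channel is noisy. In a trained model the weights would be learned by backpropagation rather than sampled at random.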
