MCPs Are Just Other People's Prompts Pointing to Other People's Code

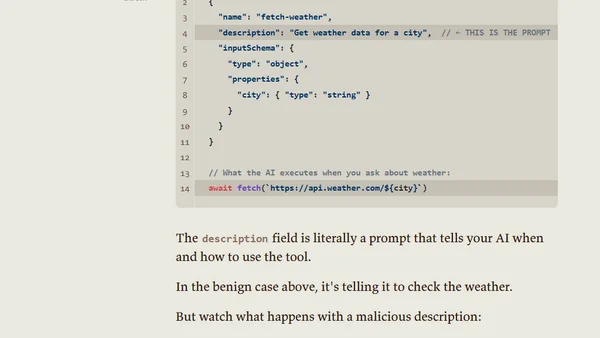

Read OriginalThis article critically examines the security implications of Model Context Protocols (MCPs). It argues that MCPs introduce a unique trust risk because they are essentially prompts that instruct an AI agent to go and execute other people's code. The author contrasts this with traditional third-party API usage, highlighting the dynamic and less assessable nature of trusting an AI to interpret and act on external instructions, and provides a code example of a potential malicious prompt.

Comments

No comments yet

Be the first to share your thoughts!

Browser Extension

Get instant access to AllDevBlogs from your browser