Notes on ‘AI Engineering’ chapter 9: Inference Optimisation

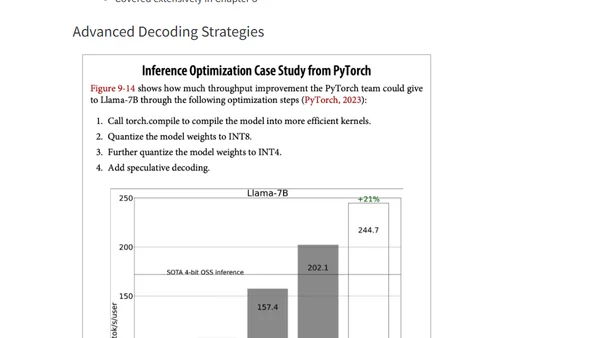

This article provides detailed notes on inference optimization for AI systems, based on Chapter 9 of Chip Huyen's 'AI Engineering'. It covers core concepts such as compute-bound and memory-bandwidth-bound bottlenecks, inference APIs (online vs. batch), key performance metrics (latency and throughput), and the business importance of reducing inference costs, which can constitute up to 90% of ML expenses.
