Running Mistral 7B Instruct on a MacBook

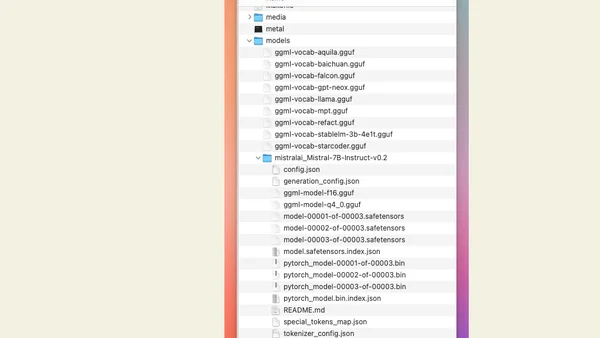

This article is a step-by-step tutorial for running the Mistral 7B Instruct v0.2 model on an Apple Silicon MacBook. It covers downloading the model from Hugging Face, converting and quantizing it with llama.cpp, and running inference. It reports a practical speed of about 20 tokens per second on an M2 Mac and also mentions Ollama as a simpler alternative.
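Quantization is what makes a 7B model practical on a laptop, and the reported decode rate sets expectations for response time. The sketch below is a back-of-envelope estimate, not taken from the article. It assumes roughly 7e9 parameters (the "7B" in the name) and computes weight storage as parameters × bits per weight / 8. It ignores the per-block scale and metadata overhead that real quantized files carry, so actual files will be somewhat larger. The 20 tokens/second figure is the article's M2 number. The bit widths are illustrative.

```python
# Back-of-envelope sizing sketch (assumptions, not measurements):
# - PARAMS ~= 7e9, taken from the "7B" in the model name
# - storage = params * bits_per_weight / 8, ignoring quantization scale/metadata overhead
PARAMS = 7e9


def weight_size_gb(params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def generation_seconds(num_tokens: int, tokens_per_second: float = 20.0) -> float:
    """Time to generate num_tokens at a steady decode rate (default: ~20 tok/s on an M2)."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>5}: ~{weight_size_gb(PARAMS, bits):.1f} GB")
    print(f"500 tokens at ~20 tok/s: ~{generation_seconds(500):.0f} s")
```

Under these assumptions, going from 16-bit to 4-bit weights cuts storage from about 14 GB to about 3.5 GB. That difference is why the quantization step matters on machines with limited unified memory.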
