Cachy: How we made our notebooks 60x faster.

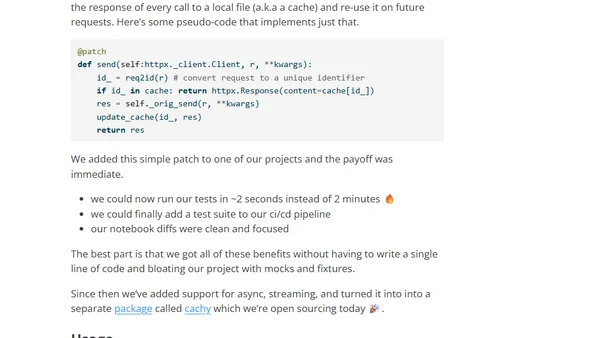

The article details how AnswerAI created Cachy, a Python package that patches the httpx library to automatically cache responses from LLM providers like OpenAI and Anthropic. This eliminates slow, non-deterministic LLM calls in tests and development, making notebooks 60x faster, enabling CI/CD integration, and producing cleaner code diffs without manual mocking.
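
To make the idea of "patching a client to cache responses" concrete, here is a minimal sketch of the general technique. It is not Cachy's actual implementation: `FakeClient`, `request_key`, and `enable_cache` are hypothetical names, and a stand-in class is used in place of httpx so the example runs with only the standard library.

```python
import hashlib
import json


class FakeClient:
    """Hypothetical stand-in for an HTTP client such as httpx.Client."""

    calls = 0  # counts how many times the simulated network is actually hit

    def send(self, method, url, body=""):
        FakeClient.calls += 1
        return f"response to {method} {url} {body}"


def request_key(method, url, body):
    """Build a deterministic cache key from the parts that identify a request."""
    raw = json.dumps([method, url, body])
    return hashlib.sha256(raw.encode()).hexdigest()


def enable_cache(client_cls, cache):
    """Replace client_cls.send with a wrapper that consults the cache first."""
    original_send = client_cls.send

    def cached_send(self, method, url, body=""):
        key = request_key(method, url, body)
        if key not in cache:
            # Cache miss: fall through to the real send and remember the result.
            cache[key] = original_send(self, method, url, body)
        return cache[key]

    client_cls.send = cached_send


cache = {}
enable_cache(FakeClient, cache)

client = FakeClient()
# Two identical requests: only the first one reaches the simulated network.
first = client.send("POST", "https://llm.example/v1/chat", '{"prompt": "hi"}')
second = client.send("POST", "https://llm.example/v1/chat", '{"prompt": "hi"}')
```

Because the patch is applied at the client level, calling code needs no mocks of its own: any identical request is answered from `cache`, which is what makes repeated runs both fast and deterministic.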
